Jens Axboe

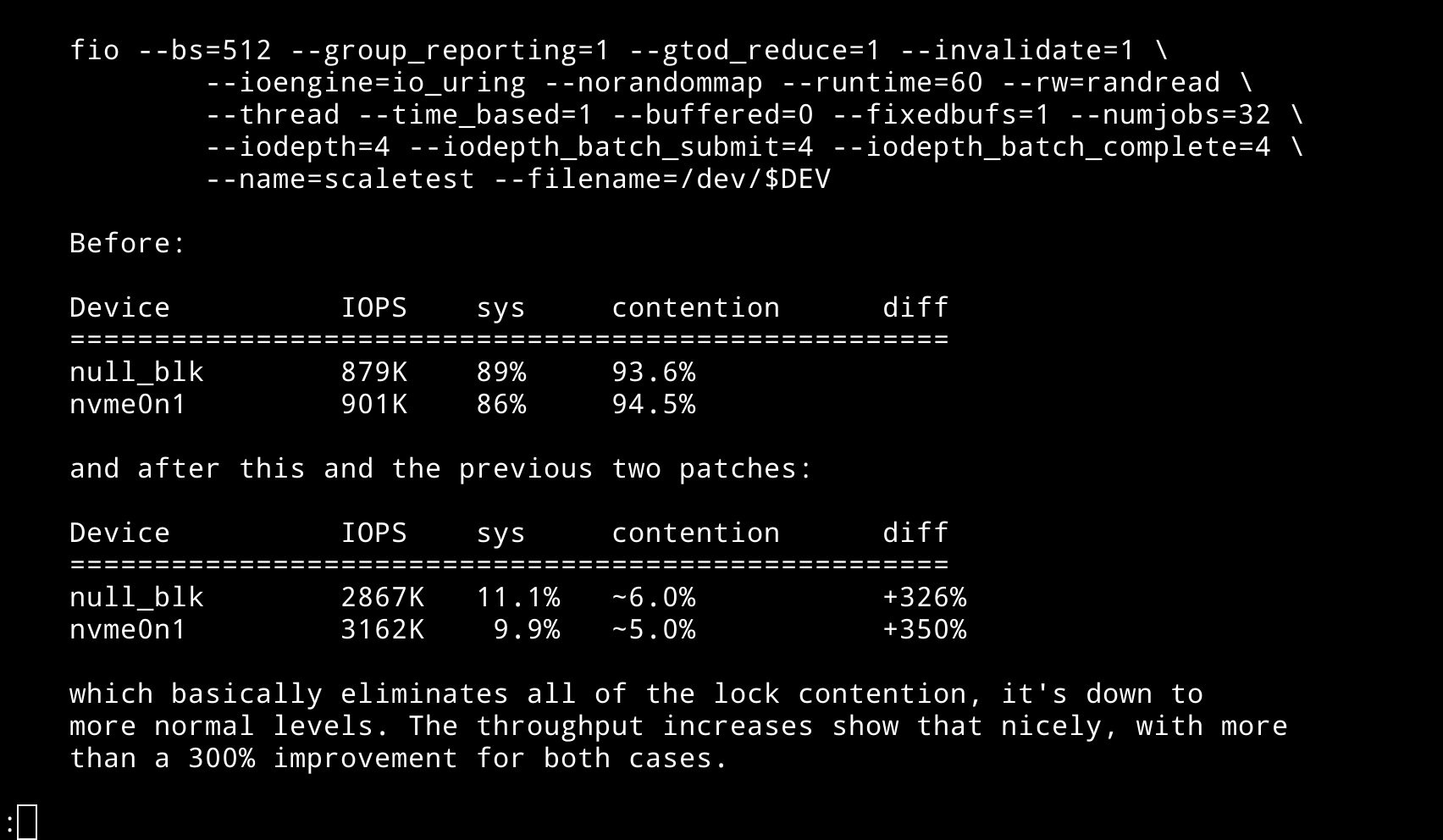

axboe@fosstodon.org

"mq-deadline doesn't scale!" complaints are apparently rampant, so I spent a bit of time looking into that and wrote a few patches to address the basics. 100+% improvement, obviously the complaints were right.

https://lore.kernel.org/linux-block/20240118180541.930783-1-axboe@kernel.dk/

Now waiting for folks to poke holes in it.

Jens Axboe

axboe@fosstodon.org

This, again, was low-hanging fruit, and if some folks spent less time complaining and actually used a day or two to look into the issue, then it could've been improved years ago.

Holger Hoffstätte

asynchronaut@fosstodon.org

@axboe Gave it a try - booted fine but got a hard lockup after a few minutes doing random desktop things. Sorry, no stacktrace.

Jens Axboe

axboe@fosstodon.org

@asynchronaut Thanks for testing! Lockup as in the whole system froze (and wasn't pingable), or as in stalled disk access? I would not be surprised if there are a few gaps in my logic there, I'll take another look and spend a bit of time ensuring it's sane.

Jens Axboe

axboe@fosstodon.org

@asynchronaut Forgot to ask, can you let me know what storage devices you are using? Might help in narrowing down what this might be.

Holger Hoffstätte

asynchronaut@fosstodon.org

@axboe Started an app that wanted to read and write at the same time, which stalled first the app and then the entire system (probably writeback piling up or something). Didn't try ping but the mouse was still moving. Zen2 Thinkpad with internal NVME drive (WDC PC SN730). This was on top of the timestamp stuff, which seems stable by itself.

Jens Axboe

axboe@fosstodon.org

@asynchronaut OK thanks that's useful, I'll run some testing and do some mental ordering gymnastics when I get home. The timestamp stuff should be solid for sure, this one I would be more surprised if it didn't have a hitch or two. I'll look and cut a v2 once I'm happy that it should be solid.

Jens Axboe

axboe@fosstodon.org

@asynchronaut I think I see it, can you try with an updated patch 2? It's in the indicated branch:

https://git.kernel.dk/cgit/linux/log/?h=block-deadline

Patch 1 is unchanged.

Holger Hoffstätte

asynchronaut@fosstodon.org

@axboe Updated & have been running it all morning without problems. 🚀

Will continue and maybe roll out to more machines... all 2 of them :)

Jens Axboe

axboe@fosstodon.org

@asynchronaut Good to hear! I made further improvements, I'll send out a v2 this morning.

Jens Axboe

axboe@fosstodon.org

We're done here, contention totally gone, improvement now +300% for both cases. I believe it's weekend time.

Josh Triplett

josh@joshtriplett.org

Jens Axboe

axboe@fosstodon.org

@josh For the nvme device, running with no scheduler gets 5.1M IOPS, i.e. the device limit. So obviously still faster if you care about raw performance, but for most things I doubt you'd notice at this point.

Jens Axboe

axboe@fosstodon.org

Thanks to Bart, he greatly improved my patch 3. Branch updated here, if you want to peruse the patches:

Jens Axboe

axboe@fosstodon.org

Did the same for BFQ, which was capped at 569K IOPS with 96% lock contention and 86% sys time. Now runs at 1550K IOPS with ~30% lock contention, and 14.5% sys time. Patch here:

Linus Torvalds

torvalds@

@axboe that ‘spin_trylock_irq()’ is still potentially quite expensive in the failure case.

The trylock itself is probably fine, since it does

```c
int val = atomic_read(&lock->val);

if (unlikely(val))
	return 0;
```

so it’s just a single read for the contended case (still potentially causing cacheline bounces, of course, but not nearly as much as trying to get the lock). But the irq part means that we end up doing

```c
local_irq_disable(); \
raw_spin_trylock(lock) ? \
1 : ({ local_irq_enable(); 0; }); \
```

so for the “I didn’t get the lock” case you end up with that extra “disable and re-enable interrupts”.

That’s all because we don’t make each spinlock variation have its own irq-safe version, so we wrap the trylock around the whole thing.
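To make the cost argument concrete, here is a userspace C11 sketch, not kernel code. `toy_lock`, `toy_trylock()`, `toy_trylock_irq()`, `toy_trylock_irq_peek()`, `toy_unlock_irq()`, `count_irq_toggles()` and the `irq_toggles` counter are all hypothetical names, and "disabling interrupts" is only simulated by counting calls. `toy_trylock()` follows the read-first shape of the excerpt above, `toy_trylock_irq()` follows the disable/try/re-enable shape of the quoted macro, and the peek variant is just an illustration of checking the lock word before touching interrupt state, not something proposed in this thread.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical toy lock word standing in for lock->val: 0 = free, nonzero = held. */
typedef struct {
    atomic_int val;
} toy_lock;

/* Simulated interrupt masking: we only count how often it would be toggled. */
int irq_toggles;

void toy_irq_disable(void) { irq_toggles++; }
void toy_irq_enable(void)  { irq_toggles++; }

/* Read-first trylock: one plain load, and only attempt the atomic
 * compare-and-swap if the lock currently looks free. */
bool toy_trylock(toy_lock *lock)
{
    int val = atomic_load_explicit(&lock->val, memory_order_relaxed);

    if (val)
        return false;   /* contended: just the read, no read-modify-write */
    return atomic_compare_exchange_strong_explicit(&lock->val, &val, 1,
                                                   memory_order_acquire,
                                                   memory_order_relaxed);
}

/* Same shape as the quoted wrapper: disable first, re-enable on failure. */
bool toy_trylock_irq(toy_lock *lock)
{
    toy_irq_disable();
    if (toy_trylock(lock))
        return true;    /* success: "irqs" stay disabled until unlock */
    toy_irq_enable();   /* failure still pays for disable + re-enable */
    return false;
}

/* Hypothetical variant: peek at the lock word before touching irq state. */
bool toy_trylock_irq_peek(toy_lock *lock)
{
    if (atomic_load_explicit(&lock->val, memory_order_relaxed))
        return false;   /* held: no irq toggling at all */
    return toy_trylock_irq(lock);
}

/* Release the lock and re-enable the simulated interrupts. */
void toy_unlock_irq(toy_lock *lock)
{
    assert(atomic_load_explicit(&lock->val, memory_order_relaxed) != 0);
    atomic_store_explicit(&lock->val, 0, memory_order_release);
    toy_irq_enable();
}

/* Run `attempts` tries against a lock starting in state `initial`
 * and return how many simulated irq toggles they cost in total. */
int count_irq_toggles(bool (*try_fn)(toy_lock *), int initial, int attempts)
{
    toy_lock lock;

    atomic_init(&lock.val, initial);
    irq_toggles = 0;
    for (int i = 0; i < attempts; i++)
        if (try_fn(&lock))
            toy_unlock_irq(&lock);
    return irq_toggles;
}
```

Against a lock that is already held, `count_irq_toggles(toy_trylock_irq, 1, 1000)` comes out at 2000 (one disable plus one re-enable per failed attempt), while the peek variant comes out at 0. In real code such a peek is only a heuristic: the lock can still be taken between the peek and the trylock, so the trylock itself still has to be correct on its own.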

And that bfq code seems to make this worse by doing this whole thing twice, both for bfq_bic_try_lookup() and bfq_merge().

The locking around ioc_lookup_icq() in particular looks odd, in that the function itself claims to be RCU-safe, but then it also requires q->queue_lock to be held, making a shambles of the whole RCU thing.