Jonathan Corbet

corbet

There are many things I would like to do with my time. Defending LWN from AI shitheads is rather far from the top of that list. I *really* don't want to put obstacles between LWN and its readers, but it may come to that.

(Another grumpy day, sorry)

Tristan Colgate-McFarlane

tmcfarlane@toot.community

@corbet @lwn this, combined with search engines prioritising the stolen content!

This is why I think the web is genuinely doomed. It's not enough to steal the content and for search engines to kill click-throughs and ad revenue; they are literally killing the ability of original authors to serve traffic to the few real users who might want to see it.

Devastating.

FoxyLad

foxylad@mastodon.nz

wuffel

wuffel@social.tchncs.de

This helped me a lot with my little projects:

https://codeberg.org/skewray/htaccess

Alonely0 🦀 🇪🇺

Alonely0@mastodon.social

@corbet @lwn at this point we might as well be offensive. If the client seems even slightly sus, just send them gibberish data talking about how good Chihuahua muffins are. Ideally LLM-generated (yes, gross) because this doesn't add new information (linear algebra yay) and makes models collapse (aka AI inbreeding).

Dec [{( )}]

dec23k@mastodon.ie

@cadey @corbet @lwn

I recently saw a traffic spike to a small HTML-only website that never had WP on it, but was suddenly getting failed wp-admin logins and hundreds of PHP vuln scans, non stop. All from MSFT IP addresses. Abuse reports were sent, but there was no response, and the abuse kept happening.

So now I'm blocking every MSFT CIDR block that I can find, server-wide.
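A minimal sketch of the CIDR check behind a block list like this, in Python. The ranges below are RFC 5737 documentation placeholders, not real Microsoft ranges, and `is_blocked` is a hypothetical helper; an actual server-wide block would normally live in the firewall rather than in application code.

```python
import ipaddress

# Placeholder networks from RFC 5737 documentation space (assumption);
# a real deployment would load the provider's published ranges instead.
BLOCKED_CIDRS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_blocked(addr: str) -> bool:
    """Return True if addr falls inside any blocked network."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in BLOCKED_CIDRS)
```

For example, `is_blocked("192.0.2.77")` is true because the address sits inside the first placeholder range.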

Jonathan Corbet

corbet

It may yet come to that, though.

Jani Nikula

jani@floss.social

Maybe it doesn't need to be subscriber only, just registered users only? Which can also be a PITA, but if there's no enshittification for non-registered users other than the bandwidth being shared with bots, maybe it's tolerable? Could even have a banner about this explaining the benefits of registering, and how LWN won't sell your data.

Jonathan Corbet

corbet

That and, of course, the fact that everybody starts as an unregistered user. As long as we can avoid making the experience worse for them, I think we should.

Jani Nikula

jani@floss.social

Yeah, it's hard to argue against that.

And maybe you weren't looking for "helpful" advice anyway, but, uh, you know your audience. :)

Jonathan Corbet

corbet

Martin Roukala (né Peres)

mupuf@social.treehouse.systems

@corbet @lwn @jani @suihkulokki I have a simple solution: Stop being so damn relevant!!!

Wait... 🤡

Jani Nikula

jani@floss.social

@mupuf @corbet @lwn @suihkulokki

I don't think the scrapers care about that, though.

Martin Roukala (né Peres)

mupuf@social.treehouse.systems

@jani @corbet @lwn @suihkulokki Sorry, I was being too optimistic... I was thinking they wanted sources with high SNR... But you are probably right...

AUSTRALOPITHECUS 🇺🇦🇨🇿

lkundrak@metalhead.club

@corbet @lwn @jani @suihkulokki one day the photocopiers will get busy after the office hours again, but this time it's going to be linux weekly news instead of the punk fanzines

David Gerard

davidgerard@circumstances.run

@corbet @lwn @jani @suihkulokki for RationalWiki I've had to resort to a mandatory JavaScript trick that sets a cookie. Unfortunately it seems to block Googlebot, but the choice comes down to (a) human users can use the site, or (b) nobody can use the site, including Googlebot.
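A rough sketch of how a JavaScript cookie gate of this general kind can work, in Python. This is an illustration under assumptions, not RationalWiki's actual implementation: the cookie name `js_ok`, the challenge page, and the `respond` helper are all hypothetical.

```python
from http.cookies import SimpleCookie

CHALLENGE_COOKIE = "js_ok"  # hypothetical cookie name

# Tiny page whose script sets the cookie and reloads; clients that do not
# execute JavaScript never get past it.
CHALLENGE_PAGE = """<!doctype html>
<script>
  document.cookie = "js_ok=1; path=/; max-age=86400";
  location.reload();
</script>
<noscript>This site requires JavaScript.</noscript>
"""


def respond(cookie_header: str, page_html: str) -> tuple[int, str]:
    """Serve the real page only if the challenge cookie is present."""
    cookies = SimpleCookie()
    cookies.load(cookie_header or "")
    if CHALLENGE_COOKIE in cookies and cookies[CHALLENGE_COOKIE].value == "1":
        return 200, page_html
    return 200, CHALLENGE_PAGE
```

Any crawler that does not run scripts, well-behaved or not, only ever sees the challenge page, which matches the Googlebot side effect described above.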

Tobias

krono@toot.berlin

@dec23k @cadey @corbet @lwn I have a tiny site. SSH moved to a different port.

I see hundreds of login attempts a day. I receive a summary via logwatch. Nearly every day I'm blocking a whole /24 or even a /16.

I rely on fail2ban to mitigate such web server DDoS, but maybe that's not enough.

How do you detect those spikes?
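One simple way to spot such spikes is to group client addresses by network and count them. The Python sketch below assumes IPv4 addresses already extracted from the access log; `busiest_networks` and its `threshold` default are hypothetical, and nothing here is taken from logwatch or fail2ban.

```python
import ipaddress
from collections import Counter


def busiest_networks(addresses, prefix=24, threshold=100):
    """Count requests per /prefix network and return those at or above threshold."""
    counts = Counter(
        # strict=False masks off the host bits, so 192.0.2.77 -> 192.0.2.0/24
        ipaddress.ip_network(f"{a}/{prefix}", strict=False)
        for a in addresses
    )
    return [(str(net), n) for net, n in counts.most_common() if n >= threshold]
```

Running it with `prefix=16` gives the coarser view that matches blocking a whole /16.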

Ben Tasker

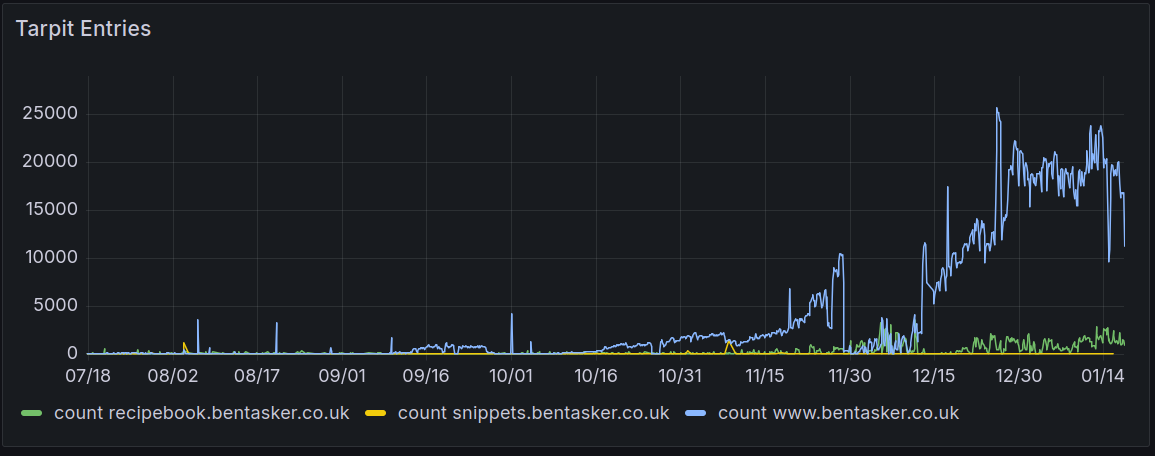

ben@mastodon.bentasker.co.uk

@sbb @corbet @lwn Yep, I've also had a big bump in traffic over the last couple of months (despite levels already having been elevated because of AI scraper activity).

Happily though, it looks like a lot of them have been falling into my LLM tarpit.

I think those figures are under-reporting too - I've also seen a significant rise in the number of 5xx status codes, suggesting my tarpit container might not be keeping up

trademark

trademark@fosstodon.org

@corbet Instead of making it worse for unregistered users, how about sharding the site with recent frequently accessed content separate from old long-tail? Easier to keep a small site in cache.

Jonathan Corbet

corbet

trademark

trademark@fosstodon.org

@corbet Can you say what the problem is? CPU, contention in unshardeable database?

Jonathan Corbet

corbet

trademark

trademark@fosstodon.org

@corbet Yeah, you're running Python code? Other option is to try a JIT or Cython, but if you value your time it might be more expensive to debug that than to pay for the extra CPU :(

mx alex tax1a - 2020 (6)

atax1a@infosec.exchange

@dec23k @cadey @corbet @lwn @davidgerard we have a program that hooks into nginx on one end and freebsd blocklistd on the other and when someone hits up PHP endpoints on our sites that havent had PHP on them in over 20 years, they get firewalled. sure as shit, most of them come from azure. also we host our own email and most of the spam that makes it through our filters comes from google. makes u think dot jpeg
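The detection half of a setup like this can be sketched in a few lines of Python. This is not the poster's program: the pattern and the `is_php_probe` helper are hypothetical, and the hand-off to nginx or blocklistd is deliberately left out.

```python
import re

# On a site that serves no PHP at all, any request for a .php file or a
# WordPress path is treated as a probe (assumed heuristic).
PROBE_PATTERN = re.compile(
    r"(\.php(\?|/|$)|/wp-(admin|login|content|includes))",
    re.IGNORECASE,
)


def is_php_probe(path: str) -> bool:
    """Return True if the request path looks like a PHP/WordPress scan."""
    return PROBE_PATTERN.search(path) is not None
```

A caller would pass the request path from each log line and report matching client addresses to whatever does the firewalling.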