Hello, if you care about Servo, the alternative web browser engine that was originally slated to be the next-gen Firefox but is now a donation-supported project under the Linux Foundation, you should know that Servo announced a plan to start allowing, as of June, Servo code to be written by "GitHub Copilot":

https://floss.social/@servo/114296977894869359

There's a thoughtful post about this by the author of the former policy, which until now banned this:

https://kolektiva.social/@delan/114319591118095728

FOLLOWUP: Canceled! https://mastodon.social/@mcc/114376955704933891

There has been a public comment period on this plan, with public comment so far overwhelmingly negative. I'm not sure how this response will impact the plan; the announcement said enactment was "subject to a review of your feedback", whatever that means.

Personally I think the plan is a horrible idea on both technical and moral grounds, and would end Servo's current frontrunner status as the best candidate for a browser that truly represents the open web and respects the needs of its own users.

Please do not respond to this thread to talk about Ladybird. This is a personal request from me. Just… please. Don't make me have a conversation about Ladybird today. Some other time.

Thad

Thad@brontosin.space

@mcc I'm extremely skeptical, but you're right, it's a thoughtful post; it sounds like what they're proposing for now is a trial period with some fairly strict limitations. I think that changes my reaction from "this is a terrible idea" to "this is probably a waste of time but at least it sounds like it's a well-controlled experiment."

Guess we'll see.

@Thad I would describe delan's post as thoughtful, but not the proposed policy. I think the proposed policy is unacceptable whether it is an experiment or not.

GreenDotGuy

DarcMoughty@infosec.exchange

@mcc I'm not a developer, but I've been filing tickets on Firefox since the beginning of Mozilla, so I'm not a random n00b either.

It seems to me that a 'greenfield layout engine in a new language' is exactly where AI shouldn't be used, but I could see it being acceptable for supportive parts of a Servo-based browser, like GUI busywork and DE integration.

@DarcMoughty Because every part of a computer program has the ability to impact every other, it is my opinion you cannot confine the poison that is randomly-generated¹ code to just one part of a program.

One part of the Servo TSC proposal suggests they could limit random text generation¹ to the documentation, which seems to me like if anything an even worse place to use it than the code.

¹ "Generative AI"

ShadSterling

ShadSterling@mastodon.social

@mcc I got the impression that it was basically one person who wanted to contribute slop; isn’t the license one where they could make a fork and do whatever they want in their fork?

(I’m sure they wouldn’t name their fork “Slopo”, but I bet it would be called that)

@ShadSterling I mean, I'm hoping the reverse is an option if they go through with allowing slop

@mcc well, it's written in rust right? so in theory it should be safe right 😏

Stefan Scholl

Stefan_S_from_H@mastodon.social

@mcc When I read the announcement on Mastodon, I immediately unfollowed them and deleted my local Servo folder.

@mcc Are there cases where you would not find LLMs objectionable for code assistance? Just to craft an arbitrary example, if the choice was between fewer unit tests or more tests with LLMs, are fewer tests always preferable?

@aeva Theoretically Rust should be better than languages like C at containing problems in code to the local part of the codebase where the problem resides… but there's also a limit on this, insofar as Rust can be *more* dependent in general on its memory invariants being preserved, so if the code is actually *partially random* as opposed to being merely buggy…

@73s I do not think there is an appropriate use for large language model technology. In the world.

Where there are existing ML approaches that can be useful, such as speech recognition, predictive text keyboards, or basic machine translation, I believe the LLM approach makes them worse.

@73s One problem is even if one chose to ignore or argue away the technical limitations of LLM software, there would still be a moral cost to doing business with the LLM companies, who perform their training in the worst ways possible. This moral cost should be considered especially serious for a project like Servo, since after all if ethics and user freedom turn out not to be a big deal, then why are we not just using Chrome?

@mcc I wonder how often Rust "vibe coding" opts into the unsafe features of the language

@aeva One hopes never, but I don't think an LLM can actually be taught to follow project policies strictly! Some projects could address this by telling the linter to ban unsafe, but Servo has a friggin' JavaScript VM, so they probably can't do that, at least not for the entire codebase.
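For readers unfamiliar with the "tell the linter to ban unsafe" option: rustc ships an `unsafe_code` lint. Below is a minimal single-crate sketch, not Servo's actual configuration. `#![deny(unsafe_code)]` at the crate root turns every `unsafe` block into a compile error, and a module-level `#[allow(unsafe_code)]` carves out one vetted exception. The module `vm_glue` and function `read_at` are hypothetical names used only for illustration. The stricter `#![forbid(unsafe_code)]` cannot be overridden by a later `allow`, which is presumably why a codebase that genuinely needs some unsafe code (such as JavaScript engine bindings) could only apply it crate by crate rather than everywhere.

```rust
// Crate-wide rule: any `unsafe` block outside an explicit exception fails to compile.
#![deny(unsafe_code)]

// Hypothetical module standing in for vetted low-level glue code.
// This `allow` overrides the crate-level `deny` for this module only.
#[allow(unsafe_code)]
mod vm_glue {
    /// Safe wrapper: callers never write `unsafe` themselves.
    pub fn read_at(values: &[i32], i: usize) -> Option<i32> {
        if i < values.len() {
            // SAFETY: `i` was bounds-checked on the line above.
            Some(unsafe { *values.get_unchecked(i) })
        } else {
            None
        }
    }
}

fn main() {
    let values = [10, 20, 30];
    // Writing `unsafe { ... }` directly in this function would be rejected by the lint.
    println!("{:?}", vm_glue::read_at(&values, 1));
    println!("{:?}", vm_glue::read_at(&values, 5));
}
```

Running it prints `Some(20)` and then `None`. The design choice is that reviewers only need to audit the `allow`ed module, since the compiler enforces the ban everywhere else.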

@mcc Thanks for the answer. The moral part has been hard for me to completely come to terms with. It does feel odd that it's been trained on anything I've ever uploaded to github. To me it feels less of an issue if it's used to generate more open source code in turn, but I can't really provide a reasonable justification for that.

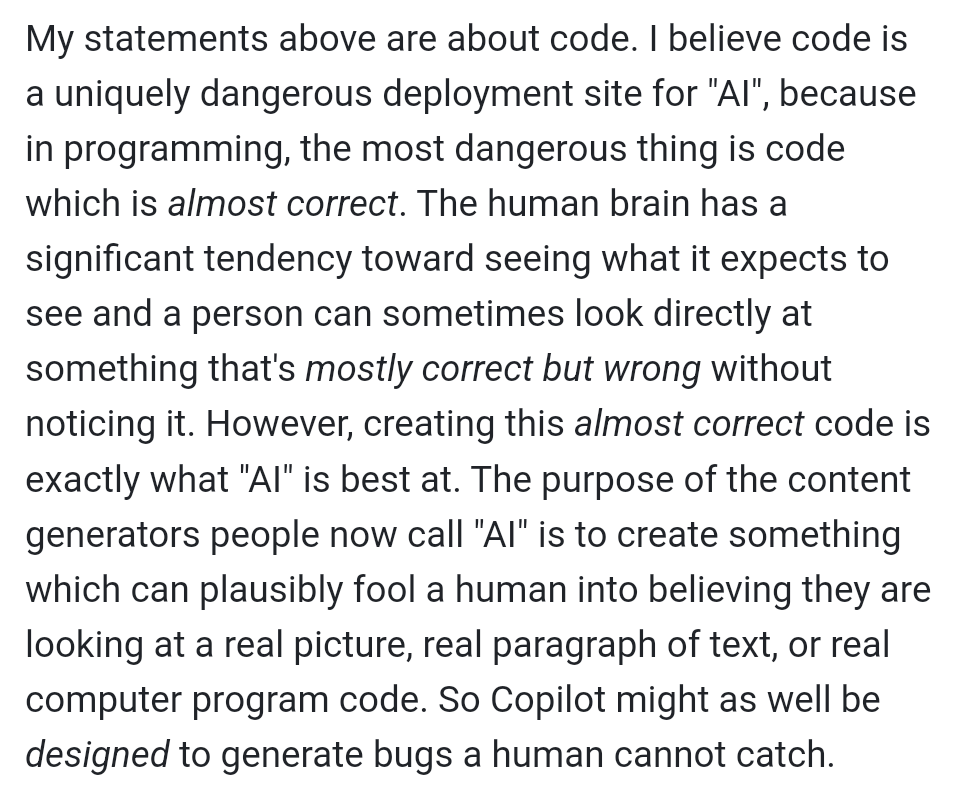

By the way, I still think this paragraph I wrote is a pretty good paragraph.

@73s I thought I was contributing to a commons on the grounds of, you can use my stuff for whatever as long as you follow these minimal conditions I set. Now Microsoft decided "open source" means "everything you wrote is public domain and the decisions you made about licensing are irrelevant"

Power_to_the_People (he/him)

PttP@mastodon.social

@mcc Not a coder*, so maybe I shouldn't pipe up, but imho that's a **very good** paragraph. Well done!

*Not a coder, but halfway decent with logic

Gastropod

Bigshellevent@mastodon.social

@mcc I'd extend this to say I'm worried about anything that is superficially believable to anyone who is not an expert in that area but could act on the believable advice.

For example, I will catch AI making up almost-correct shit about differentiating a few common skin pathogens. However, if it made up shit about statistical methods in an epidemiology study, I wouldn't pick up on it.

The concern is believable-sounding falsehood that I might act on.

@mcc only thing it was good for was making me feel less alone. like a pair programming house cat.

@lritter So I will admit that in the past, I have had pair programming sessions where the only function the other member of the "pair" was serving was keeping me awake. It was at that point roughly 4 AM.

@lritter My pair partner in this particular story was an electrical engineer with no experience with executable "programming", so pretty much his function was that I explained everything I did as I did it, and if I said anything that was actively nonsensical he'd notice. A rubber duck could have served the same purpose, but if I *knew* it was a rubber duck, maybe I'd have started cheating and stopped making sense.

James Widman

JamesWidman@mastodon.social@mcc thank you for this!

i guess this points at a use-case for "ai": it can generate a puzzle where the goal is to find a bug.

...except that "ai" is not even very good for that, bc if you want a puzzle that has a known bug, it helps if the author of the puzzle knows what the bug is ahead of time.

It probably also helps if it's the kind of bug that already bit the author, whereas an "ai" may generate bugs that no human being would accidentally make.

Tom Forsyth

TomF@mastodon.gamedev.place

@mcc One of the guys at work - an excellent coder - decided to really dive into AI to see what it was actually good for, despite his starting assumption that it was a load of rubbish. It's been about 6 months of use now?

His main takeaway so far is exactly this. It can produce extremely plausible code, and often the code does "work". But you have to watch it very closely because it makes a lot of subtle mistakes, and some of them can cause bugs that would take days to find.

Cheeseness

Cheeseness@mastodon.social

@mcc I thought your entire post was well written and on point.

I don't use GitHub these days, but I'm glad to see others sharing things that align with my outlooks. Thank you for taking the time/effort!

Nasado

nasado@tech.lgbt

Richard Hendricks

hendric@astronomy.city

@mcc What's your copyright on this? Would you mind if we shared it in the office?

Michael Horne

recantha@mastodon.social

@mcc Plausible but not correct: spot on. I've been using GitHub Copilot for a few months. Some things it's very good at. Well, one thing: pattern recognition. So if I'm doing something repetitive but not really, it's good at predicting "the next thing". But honestly? I can type faster than it can return results most of the time, so is it *really* helpful? Sometimes, I guess, but I'm having to be like a hawk reading what it's spat out!

pandabutter

pandabutter@plush.city

@TomF @mcc The situation is similar for translation IMO (at least for popular language pairs): LLMs actually increase the need for human oversight *by a professional.* Their results can be grammatically sound, fluent, even colloquial, but still fundamentally wrong, because they convey something different from the source. Other forms of machine translation can garble source meaning, but LLMs can put words in people's mouths.

@pandabutter @TomF yeah i prefer pre-LLM translation tools because when pre-LLM translation tools failed it was "loudly"; even an untrained human could usually see it was gibberish. which is better than making something up…

pandabutter

pandabutter@plush.city

@TomF @mcc One of my teachers did an interesting exercise where we translated something and *then* compared our versions to ChatGPT. Her point was that we can improve our *own* translations by using elements we liked from the LLM version, and that doing so will increase our lexicon and make *us* better translators.

I generally agree, but I don't actually do this. LLMs being useful *in this one context* doesn't absolve them of ethical and environmental concerns.

VildaVedo

VildaVedo@mastodon.arch-linux.cz

I hope we will see this soon: https://ladybird.org/

Thomas Depierre

Di4na@hachyderm.io

@mcc Smack in the middle of my analysis too.

Too many people seem not to realise that the target moved from "making an ML system that is right" to "making an ML system that humans feel is right", and that this was the main advance.

Dusty Attic

dustyattic@kolektiva.social

@mcc I was reading your response to the Servo proposal the other day and it reminded me of Ursula Franklin's argument that harmful technology can be successfully opposed only by making it a moral issue, not a technical (pragmatic) one.

While the latter approach might be useful at the time, things can often be made "good enough" while never addressing the actual harms.

@dustyattic @73s I am not familiar with Ursula Franklin but apparently live practically down the street from a high school named after her…

If I were curious about her writings, where should I start? She seems more likely to have written a book than a blog post.

Owlor

Owlor@meow.social

@mcc Oh god, this is what I feel as an artist about using AI for art too: it's practically designed to introduce the exact sort of mistakes that are really hard to catch, cus everything LOOKS mostly correct. The main difference is that when this happens with art, you just get art that looks kind of bad, but when this happens to code, you can easily fuck up the entire week for a lot of people.

@Owlor Yeah, when code is wrong in *just one place* that *just one place* can cause the rest of the code to act incorrectly as well.

Richard Hendricks

hendric@astronomy.city

@mcc Sorry, I meant: are you OK with us sharing it outside Masto, and do you want credit for it?

Moos-a-dee 🫎

Moosader@mastodon.gamedev.place

@Moosader @hendric Thanks! The paragraph was from my response to the Servo TSC "AI" proposal on GitHub: https://github.com/servo/servo/discussions/36379#discussioncomment-12752707. It's fine to reshare it in part or in full, but I would prefer that you credit me in some way. (I guess if you're going to put it in a commercial publication, come back and let's have another discussion? Not used to thinking about licensing terms on forum posts lol)

VildaVedo

VildaVedo@mastodon.arch-linux.cz

@mcc Okay, you may edit posts and alert users directly in the post.

It's kind of strange, isn't it?

Hoshino Lina (星乃リナ) 🩵 3D Yuri Wedding 2026!!!

lina@vt.social

@mcc @Owlor This is how unsafe code works in Rust: the point is to limit the danger zones to just those blocks... but a subtly wrong unsafe block can still cause the whole program to be UB. That's why we have humans write and check them. It works because it's not a lot of code, so it's feasible to analyze and (at least informally) prove correct.

I dread the idea of LLMs writing the kind of subtle unsafe{} stuff that you end up using in real Rust projects because LLMs absolutely cannot reason about the subtleties of safety, invariants, etc., especially in more complex codebases.
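To make the "small danger zone" idea concrete, here is a minimal sketch using a hypothetical `AsciiBuf` type (not code from Servo or any real project) of the usual pattern: one tiny `unsafe` block whose soundness depends on an invariant that ordinary safe code in the same module must uphold. The unsafe line itself is trivial, but if the constructor's check were subtly wrong, calling `as_str` would be undefined behavior, which is exactly the kind of non-local reasoning described above.

```rust
mod ascii {
    /// Hypothetical type: a byte buffer guaranteed to contain only ASCII.
    pub struct AsciiBuf {
        // Private field: only code in this module can construct or mutate it,
        // so the "all bytes are ASCII" invariant is enforced here and nowhere else.
        bytes: Vec<u8>,
    }

    impl AsciiBuf {
        /// Safe constructor, and the only way to build an AsciiBuf.
        pub fn new(bytes: Vec<u8>) -> Option<AsciiBuf> {
            if bytes.iter().all(|b| b.is_ascii()) {
                Some(AsciiBuf { bytes })
            } else {
                None
            }
        }

        pub fn as_str(&self) -> &str {
            // SAFETY: every byte is ASCII (checked in `new`), and ASCII is valid UTF-8.
            // If `new` ever skipped or weakened that check, this line would be
            // undefined behavior, even though the bug would live in "safe" code.
            unsafe { std::str::from_utf8_unchecked(&self.bytes) }
        }
    }
}

fn main() {
    let buf = ascii::AsciiBuf::new(b"servo".to_vec()).expect("input is ASCII");
    println!("{}", buf.as_str());
    // Non-ASCII input is rejected up front, so `as_str` never sees invalid UTF-8.
    assert!(ascii::AsciiBuf::new(vec![0xff]).is_none());
}
```

The review burden is the whole module, not just the `unsafe` line: the private field is what keeps the invariant checkable by a human reading a few dozen lines.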

@zer0unplanned I'm sorry, I don't think I understand the subject of this post.

@mcc I do want to say that they're not literally designed to "create something which can plausibly fool a human." Although some may be intentionally using them that way, they weren't actually designed to do this. Just, unfortunately, the way they work does produce this particular result. If anything it was almost more by accident that they work so well at tricking people. Had people been more resistant or the results just a little less able to fool people who don't know better, they would have remained a niche thing, and it's sort of too bad it didn't work out more that way.

But your fundamental point is spot on. Anything it produces -- especially in anything as complex as code -- is going to make it harder for the brain to actually look at it and figure out what is wrong.

David Nash

dpnash@c.im

@nazokiyoubinbou @mcc

> Had people been more resistant or the results just a little less able to fool people who don't know better, they would have remained a niche thing, and it's sort of too bad it didn't work out more that way.

I unintentionally but successfully inoculated myself against the hype by giving ChatGPT, shortly after it came out, a very simple request: "Describe the extrasolar planets around <<fake ID for star that doesn't exist>>."

I verified with a quick internet search that the fake ID didn't appear on any websites and wasn't easily confused with real ones that might appear in legitimate data. As far as I have been able to tell, nobody on the internet has ever used that fake ID before. It wasn't going to be in any LLM's training data.

I honestly was not prepared for what actually happened.

Instead of telling me something like "I don't know anything about that" or "Your guess is as good as mine, maybe check <<popular space science web site names>>", ChatGPT came back with multiple paragraphs of unadulterated bullshit. Complete fabrications of the orbital details and locations of nonexistent planets around a nonexistent star. I knew immediately what I was dealing with at that point, and have never been even slightly tempted to use it for anything except poking fun at "AI" hype.

Glitzersachen

glitzersachen@hachyderm.io

> I verified with a quick internet search that the fake ID didn't appear in any web sites

That time is officially over now... For every wrong "fact", you'll find an SEO website that has it on its "AI"-generated pages.

sabik

sabik@rants.au

@glitzersachen @nazokiyoubinbou @mcc

And an SEO website is the best case; for a lot of wrong "facts", there are actively malicious generated pages.

E.g. if LLMs tend to use some non-existent software library, a library of that name now exists with a malicious payload.

Edit: see also https://mastodon.social/@skry/114322252687542408

Elias Mårtenson

loke@functional.cafe

@sabik @glitzersachen @nazokiyoubinbou @mcc I wanted to know if anyone had created a log4j logger that did message deduplication.

I did a duckduckgo search for this (without ai answers) and got sent to a blog post that explained not only that indeed such a library exists, but also explained how to configure it.

I didn't pay any initial attention to the inconsistencies in the setup instructions (such as explaining that you have to add a reference to a specific class, and then the example code not mentioning this class), but I started looking for the library itself.

It didn't exist. At all. Not under a different name, nor was there any reference to any deduplication library out there that did anything remotely like what was described. There was a library with a similar name, but it was unrelated.

Apparently someone created a blog, explaining how different libraries work, and it's all slop.

That's when I realised that it doesn't matter how carefully I stay away from using these slop generators. I will still waste time on this crap because others believe they can profit from it somehow.

Tom Forsyth

TomF@mastodon.gamedev.place

@mcc It is useful for some things. It's good at pointing at bits of an API you don't know very well. It's quite good at spotting some bugs (though it also has false positives, i.e. it reports bugs that aren't bugs). And simple boilerplate is not bad - but again you have to watch it like a hawk.

So far he does not recommend it unless you're exploring a codebase/API you don't know well. We'll see.

C++ Wage Slave

CppGuy@infosec.space

In desperation, because the official documentation has let me down and I can't find what I need on the Web, I've resorted to trying two LLMs for exactly this purpose: navigating my way through a large class suite in three languages I don't know for a work project that's needed in a hurry. So far, #DeepSeek has hallucinated multiple language features that simply don't exist and has suggested six approaches, five of which were obviously wrong and the other of which, when I coded it up, simply didn't work.

#Copilot, meanwhile, simply said, "that can't be done." When I asked, "Can I do it like this?" it replied, "Yes, that would work."

It's the first time I've tried to use a large language model for anything that really mattered, and it's even worse than I thought it would be.

If you boosted the above thread please see this follow-up from the Servo project.

https://floss.social/@servo/114375866413715822

I am looking forward to continuing work on my TUI-based Servo frontend.

h3mmy

h3mmy@tech.lgbt

@mcc

I ran into an issue at work recently where the underlying issue ended up being someone who used AI autocomplete and didn't notice how wrong it was because "it made the errors go away". I educated the person on why they should disable that autocomplete, but I expect issues like this to become more prevalent and it makes me sad that is the case.

@h3mmy I am afraid to get a job in the tech industry again because I wouldn't want to work anywhere unless Copilot was banned, for this exact reason, but nowhere seems to actually ban Copilot.