Michał "rysiek" Woźniak · 🇺🇦

rysiek@mstdn.social

Are you fucking kidding me?

Jack Dorsey is a main track speaker at FOSDEM this year:

https://fosdem.org/2025/schedule/event/fosdem-2025-4507-infusing-open-source-culture-into-company-dna-a-conversation-with-jack-dorsey-and-manik-surtani-block-s-head-of-open-source/

🤮

I am at a loss for words. I also owe sincere apologies to everyone who flagged issues with FOSDEM over the years, whose concerns I often somewhat minimized. I am sorry: you were all right and I was wrong.

FOSDEM is now explicitly platforming AI/blockchain bro fascists. 🤬

👉 Edit: Drew DeVault is organizing a sit-in, and explains why: https://drewdevault.com/2025/01/16/2025-01-16-No-Billionares-at-FOSDEM-please.html

Maximilian Hils

max@fedi.hi.ls

mitmproxy 11.1 is out! 🥳

We now support *Local Capture Mode* on Windows, macOS, and (new!) Linux! This allows users to intercept local applications even if those applications have no proxy settings.

On Linux, this is done using eBPF and https://aya-rs.dev/; more details are at https://mitmproxy.org/posts/local-capture/linux/. Super proud of this team effort. 😃

Ciara

CiaraNi@mastodon.green

‘There’s no greys, only white that’s got grubby. And sin, young man, is when you treat people like things, including yourself. That’s what sin is.’

‘It’s a lot more complicated than that—’

‘No. It ain’t. When people say things are a lot more complicated than that, they means they’re getting worried they won’t like the truth. People as things, that’s where it starts.’

‘Oh, I’m sure there are worse crimes—’

‘But they STARTS with thinking about people as things ...’

Sophie Schmieg

sophieschmieg@infosec.exchange

In case you do not know how GenAI works, here is a very abridged description:

First you train your model on some inputs. This uses some very fancy linear algebra, but can be seen as mostly being a regression of some sort, i.e. a lower-dimensional approximation of the input data.

Once training is completed, you have your model predict the next token of your output. It does so by creating a list of possible tokens, together with a rank of how good a fit the model considers each token to be. You then randomly select from that list of tokens, with a bias toward higher-ranked tokens. How much bias your random choice has depends on the "temperature" parameter, with a higher temperature corresponding to a less biased, i.e. more random, selection.

Now obviously, this process consumes a lot of randomness, and the randomness does not need to be cryptographically secure, so you usually use a statistical random number generator like the Mersenne Twister at this step.
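A minimal Python sketch of that sampling step, for illustration only. The candidate tokens and their scores are made up, and the softmax-with-temperature formula is the common textbook formulation, not necessarily what any particular model does. It draws from Python's `random` module, which is a Mersenne Twister.

```python
import math
import random


def softmax_with_temperature(scores: list[float], temperature: float) -> list[float]:
    """Turn raw model scores (logits) into sampling probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Dividing by the temperature: T < 1 sharpens the distribution (stronger
    # bias toward top-ranked tokens), T > 1 flattens it toward uniform.
    scaled = [s / temperature for s in scores]
    # Subtract the max before exponentiating, for numerical stability.
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Randomly pick the next token, biased toward higher-scoring candidates."""
    tokens = list(scores)
    probs = softmax_with_temperature([scores[t] for t in tokens], temperature)
    # random.Random is CPython's Mersenne Twister (MT19937): fast and
    # statistically decent, but NOT cryptographically secure.
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidate scores for one step; not taken from a real model.
scores = {"cat": 2.0, "dog": 1.5, "fish": 0.3, "spaceship": -1.0}
rng = random.Random(42)  # fixed seed: the whole "random" output is reproducible
print([sample_token(scores, 0.7, rng) for _ in range(10)])
```

Re-seeding with the same value reproduces exactly the same token sequence. That is harmless for generating text and exactly what you do not want from a source of secrets.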

So when they write "using a Gen AI model to produce 'true' random numbers", what they're actually doing is using a cryptographically insecure random number generator and applying a bias to the random numbers generated, making it even less secure. It's amazing that someone can trick anyone into investing in that shit.
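The "even less secure" part can be quantified. A sketch, again with hypothetical scores for four candidate tokens: an unbiased pick among four options carries 2 bits of entropy, and a temperature-weighted pick over unequal scores always carries less. Both Shannon entropy (average unpredictability) and min-entropy (how easy the single likeliest value is to guess, the measure cryptographers usually care about) are shown.

```python
import math


def softmax_with_temperature(scores: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature = stronger bias."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def shannon_entropy_bits(probs: list[float]) -> float:
    """Average unpredictability of one draw, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def min_entropy_bits(probs: list[float]) -> float:
    """Worst-case unpredictability: how hard the likeliest outcome is to guess."""
    return -math.log2(max(probs))


scores = [2.0, 1.5, 0.3, -1.0]  # hypothetical scores for four candidate tokens
uniform_bits = math.log2(len(scores))  # an unbiased pick among 4 options: 2 bits

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(f"T={t}: Shannon {shannon_entropy_bits(probs):.3f} bits, "
          f"min-entropy {min_entropy_bits(probs):.3f} bits "
          f"(unbiased: {uniform_bits:.1f} bits)")
```

Raising the temperature narrows the gap but, with unequal scores, never closes it. And since the biased pick is just a function of the PRNG's output, it cannot add any unpredictability the Mersenne Twister did not already have.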

Jon Sterling

jonmsterling@mathstodon.xyz

The era of ChatGPT is kind of horrifying for me as an instructor of mathematics... Not because I am worried students will use it to cheat (I don't care! All the worse for them!), but rather because many students may try to use it to *learn*.

For example, imagine that I give a proof in lecture and it is just a bit too breezy for a student (or, similarly, they find such a proof in a textbook). They don't understand it, so they ask ChatGPT to reproduce it for them, and they ask followup questions to the LLM as they go.

I experimented with this today, on a basic result in elementary number theory, and the results were disastrous... ChatGPT sent me on five different wild-goose chases with subtle and plausible-sounding intermediate claims that were just false. Every time I responded with "Hmm, but I don't think it is true that [XXX]", the LLM responded with something like "You are right to point out this error, thank you. It is indeed not true that [XXX], but nonetheless the overall proof strategy remains valid, because we can [...further Gish gallop containing subtle and plausible-sounding claims that happen to be false]."

I know enough to be able to pinpoint these false claims relatively quickly, but my students will probably not. They'll instead see them as valid steps that they can perform in their own proofs.

Wolfie Christl

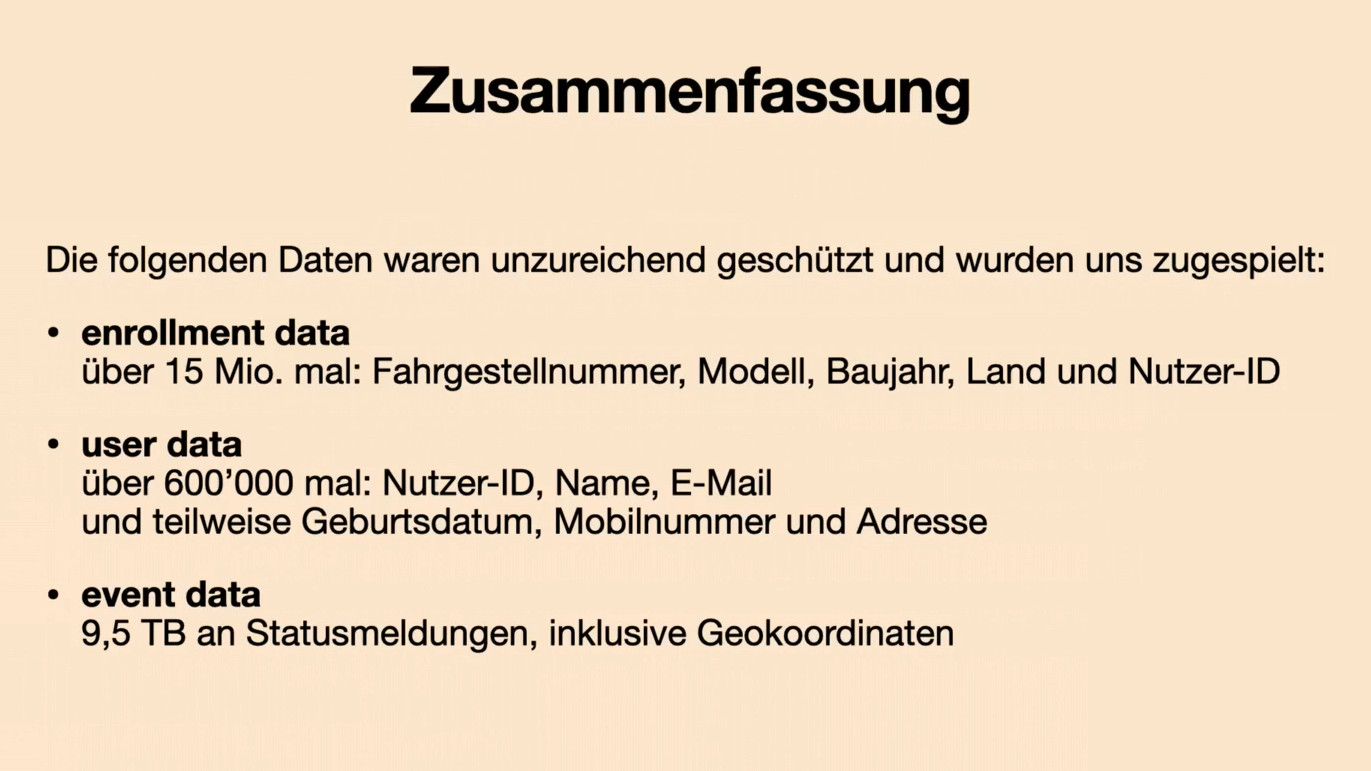

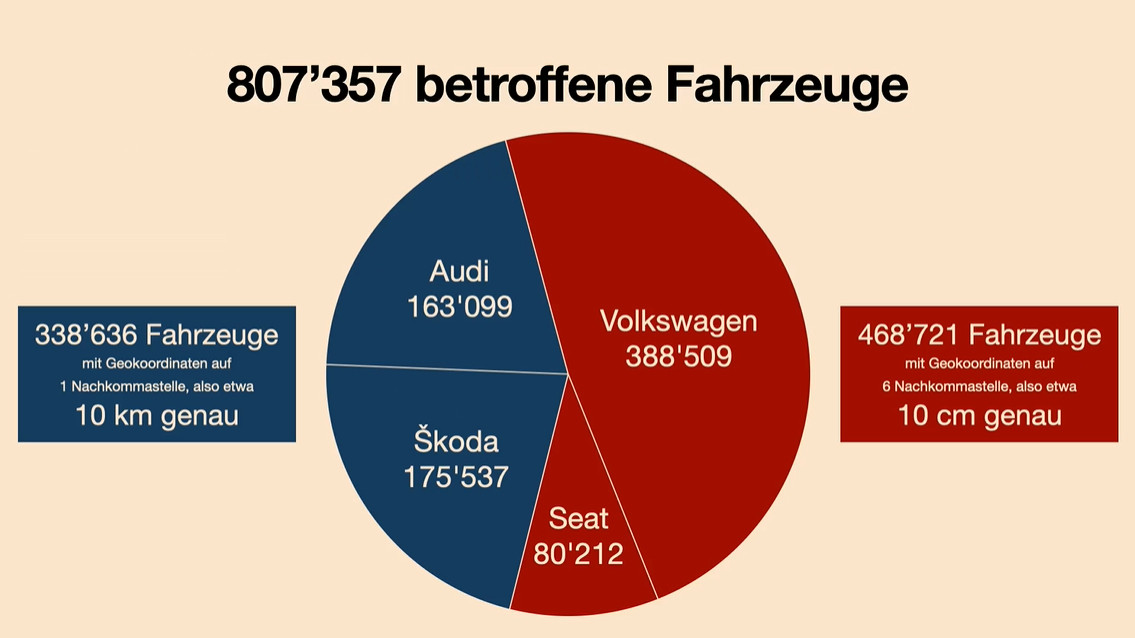

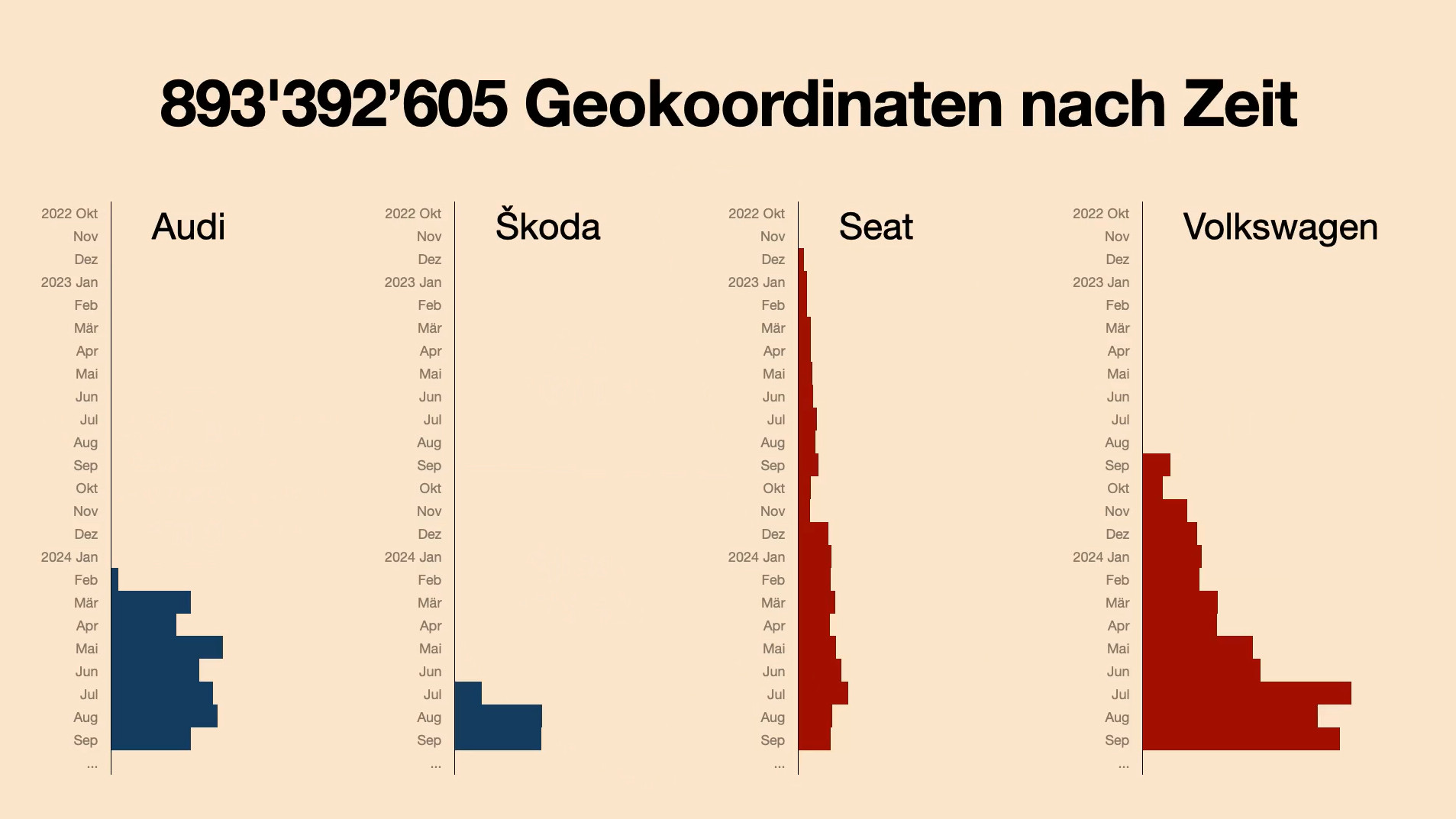

wchr@mastodon.social

Volkswagen left an unprotected database with up to two years of sensitive personal data on 800k networked VW, Seat, Audi and Skoda cars accessible online, including names, user IDs, sensor and geolocation data.

CCC talk by @fluepke and @michaelkreil (in German):

https://streaming.media.ccc.de/38c3/relive/598

Spiegel article:

https://www.spiegel.de/netzwelt/web/volkswagen-konzern-datenleck-wir-wissen-wo-dein-auto-steht-a-e12d33d0-97bc-493c-96d1-aa5892861027

Toni Aittoniemi

gimulnautti@mastodon.green

If you somehow missed it, this is a political ad by the German party that the richest man in the world has thrown his weight behind.

If you don’t see the Roman salutes in the picture, you are part of the problem and not the solution.

lucie lukas "minute" hartmann

mntmn@mastodon.social

we just stopped at a restaurant near the autobahn to hamburg to eat something and say that the MNT Reform Next campaign (it's our new 26mm thick 13" open hardware laptop) just went live aaaaa! https://www.crowdsupply.com/mnt/mnt-reform-next

evacide

evacide@hachyderm.io

I have had engineers tell me with a straight face "maps are not political." My dude, there is NOTHING MORE POLITICAL THAN MAPS.

Taggart

mttaggart@infosec.exchange

Boost if you want less generative AI in your tech in 2025.

Lynnesbian

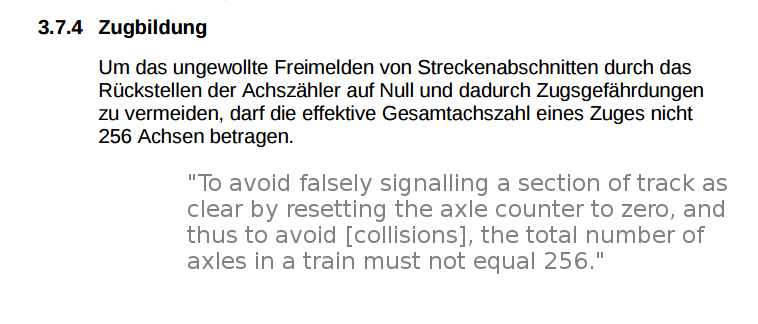

lynnesbian@fedi.lynnesbian.space

in switzerland you aren't allowed to have a train with exactly 256 axles because of an integer overflow in the axle counting machine

i wish i could fix my software bugs by making it illegal to cause them
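Assuming the axle counter keeps its count in an 8-bit unsigned register (the post only says "integer overflow", and 256 = 2^8 fits that), the failure can be sketched in Python. The counter and the "section clear" check are hypothetical simplifications, not the actual Swiss equipment; the idea is that a wrapped count of 0 would look like an empty track section.

```python
def count_axles_8bit(axle_count: int) -> int:
    """Count axles in a hypothetical 8-bit unsigned register (range 0..255)."""
    counter = 0
    for _ in range(axle_count):
        # Keep only the low 8 bits, as an 8-bit register would: 255 + 1 -> 0.
        counter = (counter + 1) & 0xFF
    return counter


def section_appears_clear(axle_count: int) -> bool:
    """Hypothetical check: a track section whose count reads zero looks empty."""
    return count_axles_8bit(axle_count) == 0


for axles in (4, 255, 256, 257):
    print(axles, "axles ->", count_axles_8bit(axles), "| looks clear:", section_appears_clear(axles))
```

A 255- or 257-axle train is counted as occupying the section; only a count of exactly 256 wraps around to 0.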

A.R. Moxon, Verified Duck 🦆

JuliusGoat@mastodon.social

I wrote about the reaction to the shooting of a CEO.

"It seems to me that when you create a world where human life has been made as cheap as possible, you will eventually find you live in a world where your human life is deemed by others to be cheap, too."

Jan Wildeboer 😷

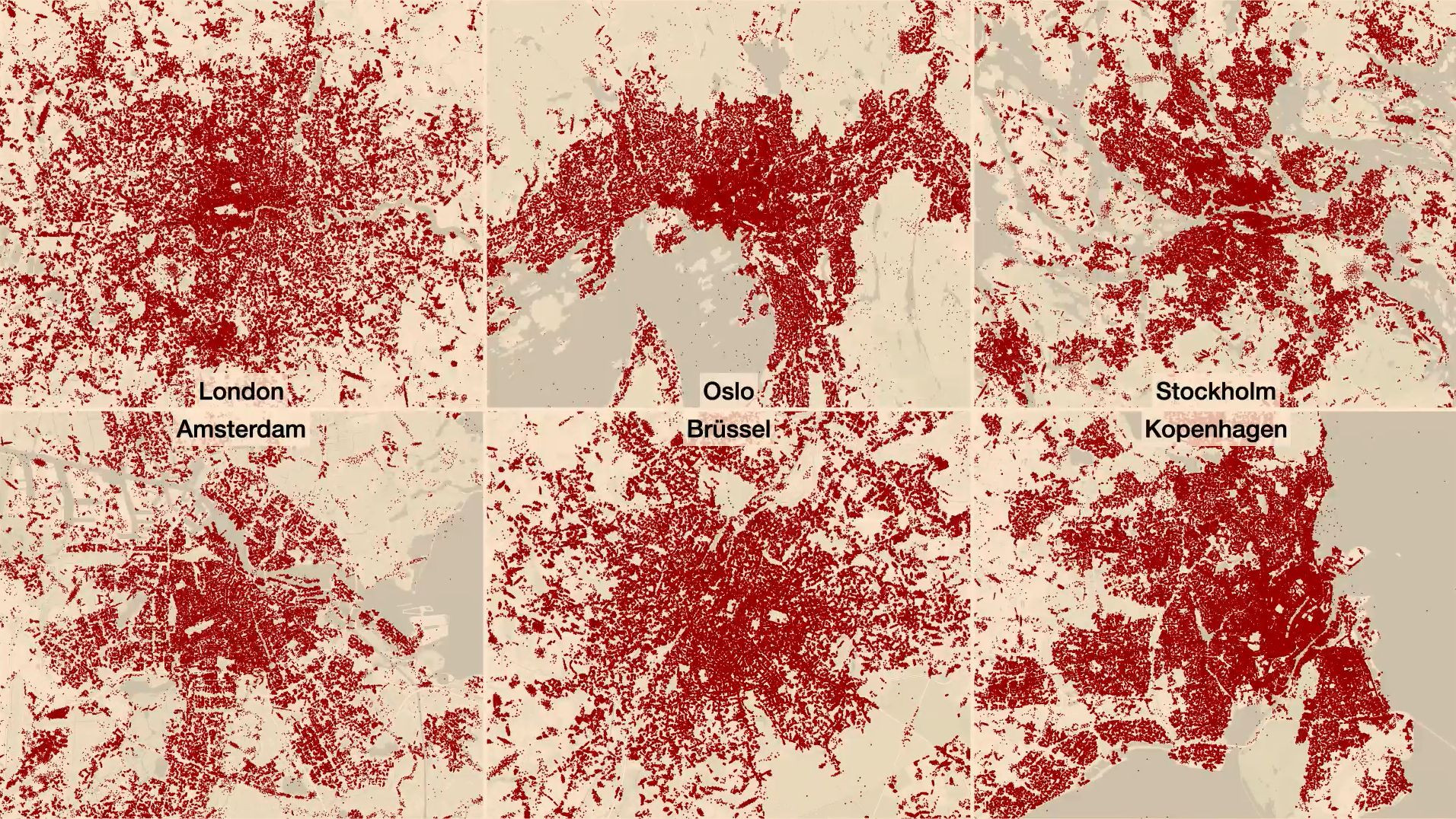

jwildeboer@social.wildeboer.net

It isn't that the far right has suddenly become a big movement in Europe. Support for the far right has always been, and will always be, around 20%. The task of society is to keep that support in the "morally unacceptable" box, buried deep in the ground. Clickbait and polarisation, amplified by technofascism and by Putin's money and methods for destabilising democracies, are however the most powerful attack on that equilibrium I have ever experienced. And we are losing.

✧✦Catherine✦✧

whitequark@mastodon.social

are you a programmer? do you like heavy metal? would you like to be *really upset* by a music video?

do i have something for you.

software engineering methodologies are simple, there are really only two goals

the first goal of a methodology is to make workers replaceable, and this is usually achieved by removing any worker agency in the project

the second goal of a methodology is to insulate decision makers from risk and this is usually achieved by blaming the workers for failure—for not hitting estimates forced upon them

unfortunately, "delivering software people want" doesn't make the cut

Mathy Vanhoef

vanhoefm@infosec.exchange

Wow, an adversary first compromised a neighbor of the target, and then attacked the target over Wi-Fi (with a stolen password).

This is the first observed case of the #AntennaForHire attack that AirEye hypothesized.

Any Wi-Fi attack is now a remote attack!

David Chisnall (*Now with 50% more sarcasm!*)

david_chisnall@infosec.exchange

When I was a PhD student, I attended a talk by the late Robin Milner where he said two things that have stuck with me.

The first, I repeat quite often. He argued that credit for an invention did not belong to the first person to invent something but to the first person to explain it well enough that no one needed to invent it again. His first historical example was Leibniz publishing calculus and then Newton claiming he invented it first: it didn’t matter whether he did or not; he failed to explain it to anyone, and so the fact that Leibniz needed to independently invent it was Newton’s failure.

The second thing, which is a lot more relevant now than at the time, was that AI should stand for Augmented Intelligence not Artificial Intelligence if you want to build things that are actually useful. Striving to replace human intelligence is not a useful pursuit because there is an abundant supply of humans and you can improve the supply of intelligent humans by removing food poverty, improving access to education, and eliminating other barriers that prevent vast numbers of intelligent humans from being able to devote time to using their intelligence. The valuable tools are ones that do things humans are bad at. Pocket calculators changed the world because being able to add ten-digit numbers together orders of magnitude faster allowed humans to use their intelligence for things that were not the tedious, repetitive tasks (and get higher accuracy for those tasks). If you want to change the world, build tools that allow humans to do more by offloading things humans are bad at and allowing them to spend more time on things humans are good at.

Toke Høiland-Jørgensen

toke

The cheap crimp tool works fine. Just double-check that all the little copper pins are pressed firmly down into the small wires when you're done (you can see that with the naked eye once it's assembled).

As for cable, I'd recommend buying Cat6a or Cat7 so you have the option of upgrading to 10Gbit at some point. They're a bit more expensive, but that's minor compared to how much of a hassle it is to pull the cables. Whether or not you think you'll need it today 😅

Quentin Monnet

qeole@hachyderm.io

Raphael Mimoun רפאל מימון

raph@social.coop

Journalist: Do you believe that Israel has a right to exist?

UN Special Rapporteur on the Occupied Palestinian Territories Francesca Albanese: