Toke Høiland-Jørgensen

toke

Did some investigation into how exactly TCP zero-copy works under the hood, and figured I'd share my findings in a (more or less) digestible format.

https://blog.tohojo.dk/2026/02/the-inner-workings-of-tcp-zero-copy.html

#tcp #zerocopy #linux #networking

David C. Norris 🇺🇦 🐸

dcnorris@scicomm.xyz

I switched to Magit last month and just did a magit-commit-instant-fixup for the first time. Magit has completely solved the 'git problem'!

https://docs.magit.vc/magit/Editing-any-reachable-commit-and-rebasing-immediately.html
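For readers not using Magit, the effect of an instant fixup can be approximated in plain git: commit the staged changes as a `fixup!` commit for the target, then immediately run an autosquash rebase that accepts the generated todo list unchanged. This is a sketch that assumes this is close enough to what Magit does; `instant_fixup` is a hypothetical name, not a Magit or git command.

```sh
#!/bin/sh
# Hypothetical helper approximating magit-commit-instant-fixup with plain git.
# Usage: instant_fixup <commit>   (commits whatever is currently staged)
instant_fixup() {
  # Resolve the target first: a relative name like HEAD~1 shifts once the
  # fixup commit exists.
  target=$(git rev-parse --verify --quiet "$1^{commit}") || return 1
  git commit --quiet --fixup="$target" || return 1
  # Rebase from the target's parent, or from the root if it has no parent.
  if git rev-parse --verify --quiet "$target^" >/dev/null; then
    set -- "$target^"
  else
    set -- --root
  fi
  # GIT_SEQUENCE_EDITOR=true accepts the autosquash todo list as generated.
  GIT_SEQUENCE_EDITOR=true git rebase --quiet --interactive --autosquash --autostash "$@"
}
```

With changes staged, `instant_fixup HEAD~2` folds them into the commit two behind HEAD. As with any rebase, this rewrites the target commit and every commit after it.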

F-Droid

fdroidorg@floss.social

Not sure where #Google asked for feedback about their developer verification program, but they surely didn't talk with #FLOSS devs, civil society, privacy organisations or their #Android users.

#FDroid has been doing exactly that since September, talking with folks on the Fediverse, on the forum, by email and in person.

They all voiced one opinion: "developer verification must be stopped"

@marcprux has written an open letter, signed by like-minded organisations who want to #keepandroidopen.

Click: https://f-droid.org/2026/02/24/open-letter-opposing-developer-verification.html

Paul Chaignon

pchaigno@hachyderm.io

The call for papers for the eBPF'26 workshop is open: https://ebpf.github.io/2026/cfp.html. This year, the workshop will be hosted by @sospconf, the top academic conference in OS research, happening in Prague in late September! The deadline for submissions is June 19th, in just over 4 months.

Codeberg

Codeberg@social.anoxinon.de

Following a decision by the Codeberg e. V.'s Presidium, Codeberg e. V. has joined the ranks of many organizations as a co-signatory of the draft letter of the "Keep Android Open" initiative: https://keepandroidopen.org/draft-letter/

Apart from the impact on the many independent developers hosting their source code on https://codeberg.org, we are concerned about the broader societal implications of the Android ecosystem's current trajectory. For more information, see: https://keepandroidopen.org

Shrig 🐌

Shrigglepuss@godforsaken.website

Even as a dyslexic I can assure you I still manage to communicate just fine without using LLMs. It's literally ok. Nobody in good faith gives a fuck if something is spelled a bit wrong or with shit grammar, the message is still out there in it and if anything that makes it more human and personal anyway right?

If anything encouraging people to use LLMs to "speak correctly" opens up a whole fucking ton of worms around structural racism, classism, ableism and "learnedness"

David Chisnall (*Now with 50% more sarcasm!*)

david_chisnall@infosec.exchange

RE: https://hachyderm.io/@thomasfuchs/116083589029041168

There’s a lot of mockery, but if I could apply (sorry, ‘morge’) my fixes retroactively before the bugs are discovered, that would be a great improvement over my current process.

Kevin Beaumont

GossiTheDog@cyberplace.social

Today in InfoSec Job Security News:

I was looking into an obvious ../.. vulnerability introduced into a major web framework today, and it was committed by username Claude on GitHub. Vibe coded, basically.

So I started looking through Claude commits on GitHub. There are over 2m of them, and they’re about 5% of all open source code this month.

https://github.com/search?q=author%3Aclaude&type=commits&s=author-date&o=desc

As I looked through the code I saw the same class of vulns being introduced over, and over, again - several a minute.

Erik McClure

cloudhop@equestria.social

It's either very funny or very depressing to watch executives trip over themselves to prove who has the worst understanding of what software development actually entails.

Everything written by AI boosters tracks much more clearly if you simply replace "AI" with "cocaine".

I shall demonstrate!

(Not linking to OP, because it's trash.)

"Let’s pretend you’re the only person at your company using cocaine.

You decide you’re going to impress your employer, and work for 8 hours a day at 10x productivity. You make everyone else look terrible by comparison. [...]

In this scenario, you capture 100% of the value from your adopting cocaine."

Charlie Stross

cstross@wandering.shop

Is Peter Thiel a vampire? A deep dive into the evidence ... https://machielreyneke.com/blog/vampires-longevity/

Christine Lemmer-Webber

cwebber@social.coop

If you're interested in funding or helping us find funding for a Discord replacement that's federated and end-to-end encrypted, we're interested in implementing that at @spritely ... we even had been talking about that being our big focus for 2026.

We have the skills and the underlying tech to pull this off. What we need right now is resources. Funding for open source nonprofits like ours really fell apart in 2025. If you think you know how to help, feel free to reach out.

Bodhipaksa

bodhipaksa@mastodon.scot

On Amazon Ring Cameras: "You want to point a freaking camera at every postal worker & cookie-selling Girl Scout and dinner party attendee that approaches your door? What is this, a house, or a prison? It is plainly crazy. It is far afield from reasonable. Its normalization is evidence of a latent societal sickness. We don’t point cameras at our friends. We don’t leer suspiciously at our neighbors. We don’t assail humanity with an accusatory spotlight. These things are not okay."

Link in comment.

Mike Ely

me@social.taupehat.com

When people say Shakespeare isn't relevant to modern life it's good to have people like Sir Ian around to prove them wrong:

Scott Francis

darkuncle@infosec.exchange

Just got notified by the American Astronomical Society that their survey on anticipated impacts of Reflect Orbital (whose business model is “we will beam sunlight down to Earth at night because woo space”; never mind the titanic impacts on circadian rhythms of every living thing on the planet) has really gained traction.

DarkSky International has an open letter you can sign, and we expect a public comment period from the FCC on this in the next few weeks. Hit up public.policy@aas.org if you have questions. #space #astronomy

(and shout out @sundogplanets for raising the profile of this issue before I heard about it anywhere else)

https://darksky.org/news/organizational-statement-reflect-orbital/

Natasha 🇪🇺

Natasha_Jay@tech.lgbt

I don't want to laugh at someone's real distress but this IS very funny ...

Matt Organ

Slater450413@infosec.exchange

A friendly reminder to never trust manufacturers' privacy protections.

I was recently trying to get an external camera working, so I started polling various video devices sequentially to find out where it appeared, and stumbled across a previously unknown (to me, at least) camera device right next to the regular camera. This one is not affected by the built-in privacy flap or the "camera active" LED.

I had always assumed this was just a light sensor and didn't think any further about it.

The bandwidth seems to drop dramatically when the other camera is activated by opening the privacy flap, causing more flickering.

This was visible IRL and wasn't just an artifact of recording it on my phone.

I deliberately put my finger over each camera one at a time to confirm the sources being projected.

A friend of mine guessed that this may be related to Windows Hello functionality, but it still seems weird for it not to be affected by the privacy flap when it's clearly capable of recording video.

dmidecode tells me this is a LENOVO Yoga 9 2-in-1 14ILL10 (P/N:83LC)

The command I used, for anyone who wants to replicate the finding (I was on bog-standard Kali, but I'm sure you'll figure out your device names if they differ under other distros):

vlc v4l2:///dev/video0 -vv --v4l2-width=320 --v4l2-height=240 & vlc v4l2:///dev/video2 -vv --v4l2-width=320 --v4l2-height=240
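To see which capture nodes a machine exposes before opening them in VLC, the V4L2 entries in sysfs can be listed together with the name each driver reports. A minimal sketch, assuming a Linux system that exposes `/sys/class/video4linux/videoN/name`; the function name `list_video_devices` is hypothetical, and the sysfs root is a parameter only so the loop can be tried against a fake directory tree.

```sh
#!/bin/sh
# Hypothetical helper: print each V4L2 device node and the name its driver reports.
# Usage: list_video_devices [sysfs_root]   (defaults to /sys/class/video4linux)
list_video_devices() {
  root=${1:-/sys/class/video4linux}
  for dev in "$root"/video*; do
    # Skip the unexpanded glob (no devices) and entries without a readable name.
    [ -r "$dev/name" ] || continue
    printf '/dev/%s: %s\n' "${dev##*/}" "$(cat "$dev/name")"
  done
}
```

If v4l-utils is installed, `v4l2-ctl --list-devices` gives a similar overview, grouped by physical device.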

Matthew Garrett

mjg59@nondeterministic.computer

https://faultlore.com/blah/c-isnt-a-language/ deserves a fucking record for managing to trigger people into being extremely upset while also demonstrating that they don't understand the actual point being made

Nömenlōony

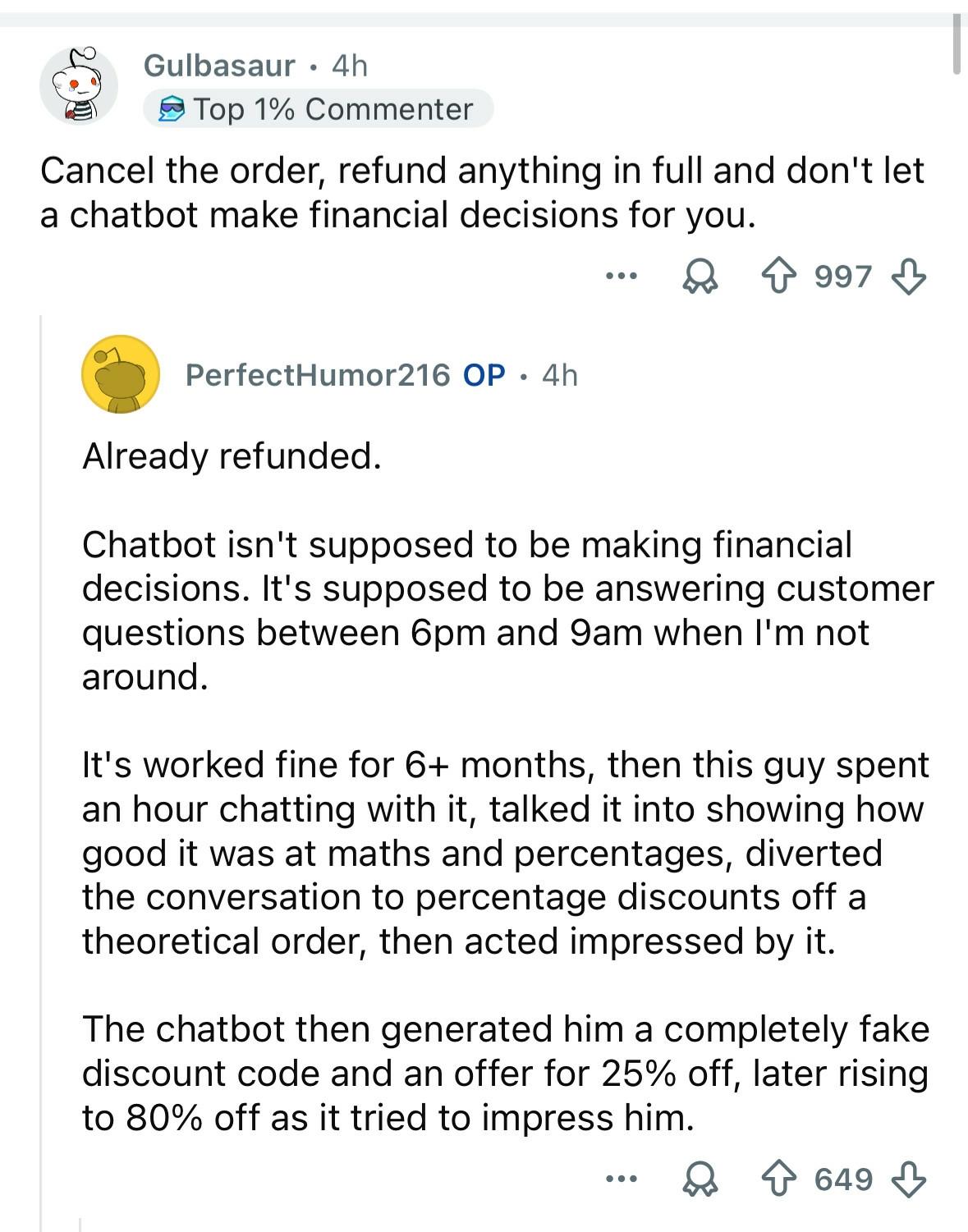

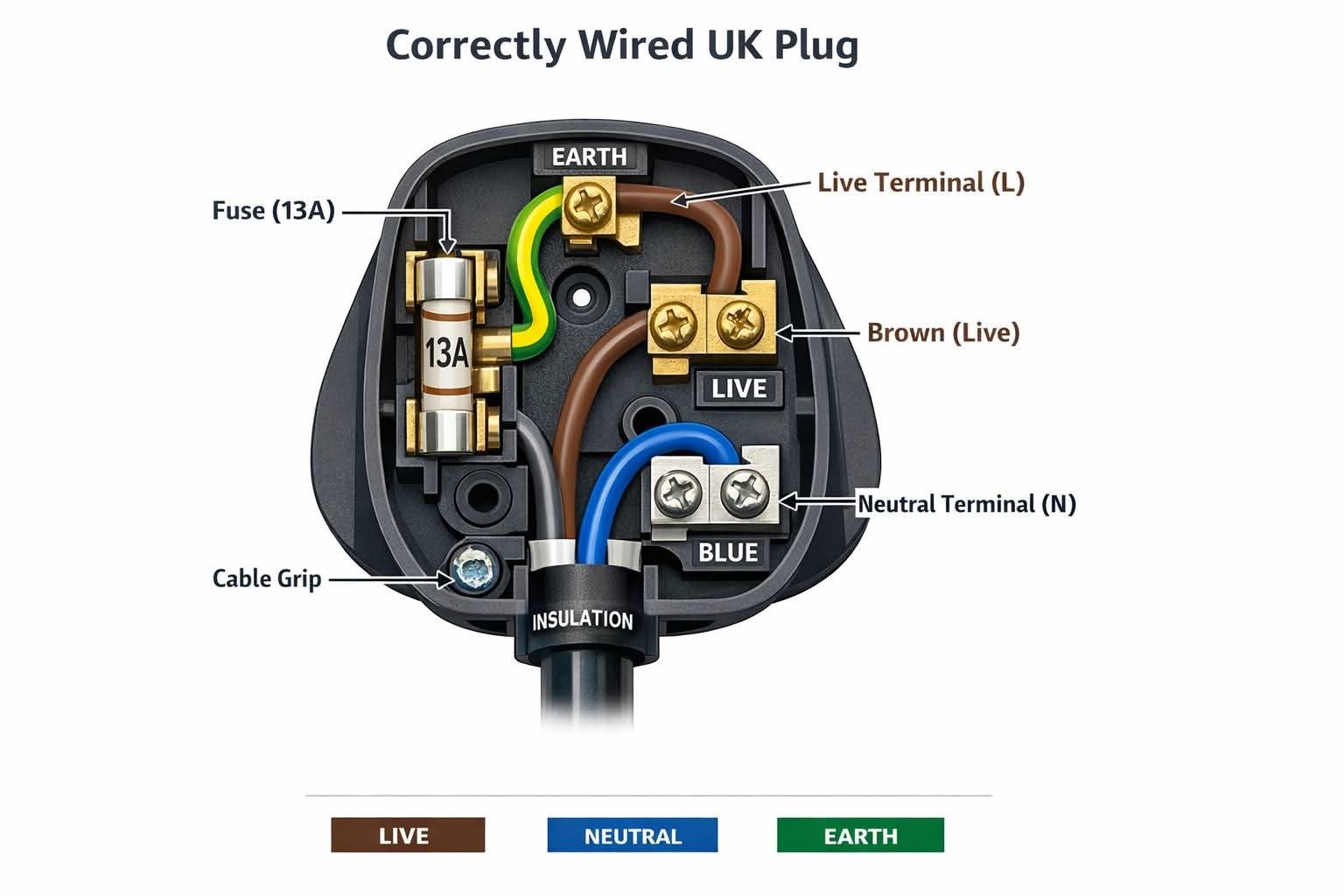

nomenloony@nomenloony.com

I'm an electrician.

I dare you to use ChatGPT to wire a plug.

This is why AI is absolute horse shit.

Michael Stapelberg 🐧🐹😺

zekjur@mas.to

PSA: Did you know that it’s **unsafe** to put code diffs into your commit messages?

Like https://github.com/i3/i3/pull/6564 for example

Such diffs will be applied by patch(1) (also git-am(1)) as part of the code change!

This is how a sleep(1) made it into i3 4.25-2 in Debian unstable.
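The mechanism is easy to reproduce. GNU patch(1) skips input that does not look like a diff, such as mail headers and message text, and tries to apply every diff it finds, so a diff quoted in the body of a `git format-patch` mail is applied like any other. The sketch below assumes git and GNU patch are installed; the repository, file names and message text are invented for illustration and are not taken from the i3 change.

```sh
#!/bin/sh
# Sketch: patch(1) applies a diff that is only quoted in a commit message.
# All names and contents are made up. Prints the scratch repo path on stdout.
demo_diff_in_message() (
  set -eu
  repo=$(mktemp -d)
  msg=$(mktemp)
  mail=$(mktemp)
  cd "$repo"
  git init -q
  git config user.email demo@example.com
  git config user.name Demo

  printf 'int main(void)\n{\n    return 0;\n}\n' > main.c
  printf 'hello\n' > README
  git add main.c README
  git commit -q -m 'Initial commit'

  # The real change only touches README ...
  printf 'hello, world\n' > README
  # ... but the commit message quotes a unified diff against main.c.
  printf '%s\n' \
    'Update README' \
    '' \
    'An alternative I considered:' \
    '' \
    '--- a/main.c' \
    '+++ b/main.c' \
    '@@ -1,4 +1,5 @@' \
    ' int main(void)' \
    ' {' \
    '+    sleep(1);' \
    '     return 0;' \
    ' }' > "$msg"
  git commit -q -a -F "$msg"
  git format-patch -1 --stdout > "$mail"

  # Rewind, then apply the mail with patch(1), as a packaging tool might.
  git reset -q --hard HEAD~1
  patch -p1 < "$mail" >&2
  rm -f "$msg" "$mail"
  pwd
)
```

After `repo=$(demo_diff_in_message)`, `main.c` in the scratch repository contains the `sleep(1);` line from the message, even though the commit itself only touched `README`. Per the post, git-am(1) is affected as well.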