Conversation

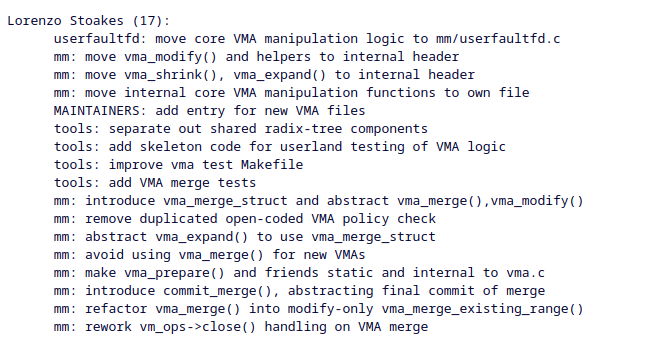

6.12 mm patches merged, I landed 17 patches implementing 2 major patch sets:

1. Completely isolating core vma operations and restructuring them so they can be built in userland, adding a ton of stubs (rather painful to do...) such that this becomes possible.

2. Eliminating vma_merge(), a horrid confusing mess of a function that has been around since forever and confusing people (including myself) forever, and completely rewriting how VMA merging works, eliminating at least one unnecessary tree walk and greatly clarifying how merges function as well as using the work done in (1) to add a series of unit tests.

Upcoming in 6.13 is my work on guard pages that I talked about at LPC and surrounding patches, and possibly/probably some more VMA stuff... and maybe... something else?

There is a more long term large series I'd like to work on around the LRU but maybe something to talk about once I have something concrete...

1. Completely isolating core vma operations and restructuring them so they can be built in userland, adding a ton of stubs (rather painful to do...) such that this becomes possible.

2. Eliminating vma_merge(), a horrid confusing mess of a function that has been around since forever and confusing people (including myself) forever, and completely rewriting how VMA merging works, eliminating at least one unnecessary tree walk and greatly clarifying how merges function as well as using the work done in (1) to add a series of unit tests.

Upcoming in 6.13 is my work on guard pages that I talked about at LPC and surrounding patches, and possibly/probably some more VMA stuff... and maybe... something else?

There is a more long term large series I'd like to work on around the LRU but maybe something to talk about once I have something concrete...

Jarkko Sakkinen

jarkko

@ljs OK then it is still existing performance issue in the SGX driver. Sean (Christopherson) removed the close callback when I was working with him on the driver to enable merges but to this day it is using PFNMAP for mapping.

So once the program is running for some time inside enclave the memory map might look like something like:

https://lore.kernel.org/linux-sgx/c148ab48-1b70-6e20-5742-b0b2dc014dc3@intel.com/

It's a corner case where the current software abstraction does not map properly the physical reality (given that for enclave memory merge would be fine even tho we need to access it with PFNMAP).

So once the program is running for some time inside enclave the memory map might look like something like:

https://lore.kernel.org/linux-sgx/c148ab48-1b70-6e20-5742-b0b2dc014dc3@intel.com/

It's a corner case where the current software abstraction does not map properly the physical reality (given that for enclave memory merge would be fine even tho we need to access it with PFNMAP).

Jarkko Sakkinen

jarkko

Edited 1 year ago

@ljs I think I worked this around in run time by closing old mmap and creating a completely new one, i.e. I literally implemented VMA merge in user space to workaround the kernel issue in https://github.com/enarx/enarx.

I even maintained my own database of memory spans with access rights etc because kernel does not merge properly :-) You cannot implement merge without having full picture of the mapped spans and their access rights. I.e. I merge in my database and then turn that into mmap() calls.

I even maintained my own database of memory spans with access rights etc because kernel does not merge properly :-) You cannot implement merge without having full picture of the mapped spans and their access rights. I.e. I merge in my database and then turn that into mmap() calls.

Jarkko Sakkinen

jarkko

@ljs If there would be something that kernel could provide some day to enable merges in such corner that'd be great but it is not a total show stopper issue. We get shit work without kernel doing merges too by redoing it in user space and it is not that hard. But anyway something to think about ;-)

Jarkko Sakkinen

jarkko

@ljs Not expecting you to fix this but this like the how the problem is scoped:

1. You have special memory without backing page structures.

2. The special memory can host executables executed by the CPU.

With those constraints there's no really other way I'm aware of than PFNMAP. Or perhaps there is better way to map the memory that I'm not just aware of? Have not really looked into the issue since 2022 :-)

1. You have special memory without backing page structures.

2. The special memory can host executables executed by the CPU.

With those constraints there's no really other way I'm aware of than PFNMAP. Or perhaps there is better way to map the memory that I'm not just aware of? Have not really looked into the issue since 2022 :-)

@jarkko

you can never merge pfn map as it's by definition spanning a range that has mappings distinct from any metadata so we have absolutely no sensible way of knowing how to merge.

I suppose you could literally read page tables and check but that's sketchy, racey, and finnicky, and would cause major latency so don't think there's any way that would make sense.

I'd say whatever's setting up the mappings should try to avoid setting up multiple ones if it's really hurting you that they don't merge.

But given these are the minority of mappings it's hard to imagine merge really is a problem there.

There are probably other reasons why we'd prefer not to, but it's sunday bro.

TL;DR at a glance this looks like a problem in the driver not mm.

you can never merge pfn map as it's by definition spanning a range that has mappings distinct from any metadata so we have absolutely no sensible way of knowing how to merge.

I suppose you could literally read page tables and check but that's sketchy, racey, and finnicky, and would cause major latency so don't think there's any way that would make sense.

I'd say whatever's setting up the mappings should try to avoid setting up multiple ones if it's really hurting you that they don't merge.

But given these are the minority of mappings it's hard to imagine merge really is a problem there.

There are probably other reasons why we'd prefer not to, but it's sunday bro.

TL;DR at a glance this looks like a problem in the driver not mm.

Jarkko Sakkinen

jarkko

Edited 1 year ago

@ljs I'd actually claim that the driver is as good as it gets in this area. It has been turned over many times by me, Sean and Dave Hansen. The way things are done is also result of 2,5 years upstreaming period so it is pretty well screened code base, and I can say by large audience of the senior maintainers. https://www.phoronix.com/news/Intel-SGX-Linux-5.11

But It's Sunday and workaround of doing your own fake mm is not as bad as it sounds. You have code that basically draws a picture of how VMA layout should look like and then does optimal set of mmaps based on that :-) Once it is in run-time nobody cares.

I'm just merely interested when something related to merges comes up "could we still improve".

I.e. I merge by:

1. Close all VMA's.

2. Map new VMA's.

The shim tracks every mmap(), mprotected() etc. syscall and updates the database accordingly.

But It's Sunday and workaround of doing your own fake mm is not as bad as it sounds. You have code that basically draws a picture of how VMA layout should look like and then does optimal set of mmaps based on that :-) Once it is in run-time nobody cares.

I'm just merely interested when something related to merges comes up "could we still improve".

I.e. I merge by:

1. Close all VMA's.

2. Map new VMA's.

The shim tracks every mmap(), mprotected() etc. syscall and updates the database accordingly.

Jarkko Sakkinen

jarkko

Edited 1 year ago

@ljs I guess it could be perhaps treated separately in "E820" (or today EFI provided) code and mark it with something else than PFNMAP. But it also depends on direct PFN manipulation in order to evict pages between regular and enclave memory (PRMRR registers preserve fixed amount of memory for SGX use).

I guess with SGX we can conclude that mm subsystem does not fully scale to that task but since it is only of its kind how it uses memory I neither expect it to 🤷 And really cannot put any blame because I have no suggestions how to exactly fix mm.

I mean if there was two subsystems then I could imagine something like VM_PFNMAP_MERGEABLE or similar VMA type: SGX memory areas as far as merging VMA's go work 1:1 as regular memory areas. It is the access to them that differs.

I guess with SGX we can conclude that mm subsystem does not fully scale to that task but since it is only of its kind how it uses memory I neither expect it to 🤷 And really cannot put any blame because I have no suggestions how to exactly fix mm.

I mean if there was two subsystems then I could imagine something like VM_PFNMAP_MERGEABLE or similar VMA type: SGX memory areas as far as merging VMA's go work 1:1 as regular memory areas. It is the access to them that differs.

@jarkko I see you've taken the 'it's Sunday' thing seriously, especially 'it's Sunday after a conference you spoke at' honestly bro come on.

It's not a lack of scalability, I explained why we CANNOT MERGE these. We're not 'failing to scale', your driver is choosing to do something that does not 'scale'.

To repeat - the PFN mappings can map any set of PFNs to the VMA virtual range (see remap_pfn_range_notrack()) - so on what basis could we merge adjacent VM_PFNMAP VMAs?

A VMA is a virtually contiguous memory range with the same attributes - but if we merged these (without walking page tables) this would no longer be the case. This would be 'somewhat' surprising for anybody who mapped distinct PFN ranges in adjacent mappings.

Keep in mind the prot bits of the mapping can vary as well as PFN ranges.

Walking the page tables and actually checking this might be possible, but it'd cause huge latency when you map any adjacent or overlapping VMAs. Encoding the data in the VMA would be fraught and fragile and then there's concerns about locking and races here...

To me that latency thing is what kills it.

Your driver seems to be mapping a bunch of stuff immediately adjacent to each other and presumably you are implying with equal prot and immediately adjacent PFNs? I mean if not, then you SHOULD NOT merge anyway.

The .close() thing is obviously bogus when you're dealing with special VMAs though semantics around .close() handling has changed with my series (in practical pretty much identical behaviour after Vlasta's optimisation on this).

Have you actually hit some kind of issue as a result of this? What 'lack of scalability' is the issue here?

It's not something I'm going to dive into, because I don't believe there's an issue here, and you're bugging me on a Sunday about it, which is making me grumpy now.

It's not a lack of scalability, I explained why we CANNOT MERGE these. We're not 'failing to scale', your driver is choosing to do something that does not 'scale'.

To repeat - the PFN mappings can map any set of PFNs to the VMA virtual range (see remap_pfn_range_notrack()) - so on what basis could we merge adjacent VM_PFNMAP VMAs?

A VMA is a virtually contiguous memory range with the same attributes - but if we merged these (without walking page tables) this would no longer be the case. This would be 'somewhat' surprising for anybody who mapped distinct PFN ranges in adjacent mappings.

Keep in mind the prot bits of the mapping can vary as well as PFN ranges.

Walking the page tables and actually checking this might be possible, but it'd cause huge latency when you map any adjacent or overlapping VMAs. Encoding the data in the VMA would be fraught and fragile and then there's concerns about locking and races here...

To me that latency thing is what kills it.

Your driver seems to be mapping a bunch of stuff immediately adjacent to each other and presumably you are implying with equal prot and immediately adjacent PFNs? I mean if not, then you SHOULD NOT merge anyway.

The .close() thing is obviously bogus when you're dealing with special VMAs though semantics around .close() handling has changed with my series (in practical pretty much identical behaviour after Vlasta's optimisation on this).

Have you actually hit some kind of issue as a result of this? What 'lack of scalability' is the issue here?

It's not something I'm going to dive into, because I don't believe there's an issue here, and you're bugging me on a Sunday about it, which is making me grumpy now.

Jarkko Sakkinen

jarkko

@ljs Yeah, you know I fully get it :-) While doing SGX I really turned all rocks in the area of merging VMA's ,PAT etc. And I had Sean, Dave and Borislav helping me out while doing that.

I don't have better vision how to make SGX merging work without user space help so not blaming you guys really either.

If I did blame the implementation, the patches would be out there for review already... And we were able to nail virtualization, NUMA and cgroups for SGX and people do use it so it's exactly just a manageable glitch. World is not perfect :-)

I don't have better vision how to make SGX merging work without user space help so not blaming you guys really either.

If I did blame the implementation, the patches would be out there for review already... And we were able to nail virtualization, NUMA and cgroups for SGX and people do use it so it's exactly just a manageable glitch. World is not perfect :-)

Jarkko Sakkinen

jarkko

@ljs Well as long as VMA setup stays static it's not a big of a deal. And I get the point. It just happens SGX ranges are always managed from in VMA level as they were regular memory but obviously core mm cannot trust on that even if that was the case.

I just check periodically if we could improve something in this area that's all.

I just check periodically if we could improve something in this area that's all.

m_on_stair

m@miruku.cafe@ljs@social.kernel.org hey could you please direct me to a resource which talks about what a guard page is and how its implemented?

im trying to learn more about it but cant find anything

@m it's a bit of an overloaded term, and is used to reference a bunch of different things.

The general concept is that you configure a mapping such that, when touched, a page fault occurs.

That's really useful as it means the hardware (i.e. the MMU) enforces that you can't touch the region.

You can do it in userspace with an mmap() call like, for example:

ptr = mmap(NULL, 11 * page_size, PROT_READ | PROT_WRITE, MAP_ANON | MAP_PRIVATE, -1, 0);

Which sets up an 11 page memory region, then

mprotect(&ptr[10 * page_size], page_size, PROT_NONE);

To mark the last page as inaccessible (PROT_NONE means neither read nor write access).

Then, if somebody overruns a buffer from ptr, they'll hit the page and the process will get a fatal signal.

Libraries like userland allocators and pthread implementations do things like this so if you allocate regions of memory to multiple thread (which share the same address space), one overrunning a buffer doesn't cause memory corruption for another.

The general concept is that you configure a mapping such that, when touched, a page fault occurs.

That's really useful as it means the hardware (i.e. the MMU) enforces that you can't touch the region.

You can do it in userspace with an mmap() call like, for example:

ptr = mmap(NULL, 11 * page_size, PROT_READ | PROT_WRITE, MAP_ANON | MAP_PRIVATE, -1, 0);

Which sets up an 11 page memory region, then

mprotect(&ptr[10 * page_size], page_size, PROT_NONE);

To mark the last page as inaccessible (PROT_NONE means neither read nor write access).

Then, if somebody overruns a buffer from ptr, they'll hit the page and the process will get a fatal signal.

Libraries like userland allocators and pthread implementations do things like this so if you allocate regions of memory to multiple thread (which share the same address space), one overrunning a buffer doesn't cause memory corruption for another.

Jarkko Sakkinen

jarkko

@ljs That said, obviously core mm would better suit to any "random intel feature" as long as it has a user base :-) it is as random or not random feature as e.g. oracle database. Or any other random user for that matter. I think all three are equivalent.

I get your point tho, mm cannot be tuned to SGX at expense of Oracle DB, some random user or any other work load. Still I thought that it is good to a biennial query at LKML if there is something new in mm that could help to improve this part of the driver or something not too intrusive that could be changed in mm :-) I think this somewhat fair standing point.

I get your point tho, mm cannot be tuned to SGX at expense of Oracle DB, some random user or any other work load. Still I thought that it is good to a biennial query at LKML if there is something new in mm that could help to improve this part of the driver or something not too intrusive that could be changed in mm :-) I think this somewhat fair standing point.