Kernel Recipes

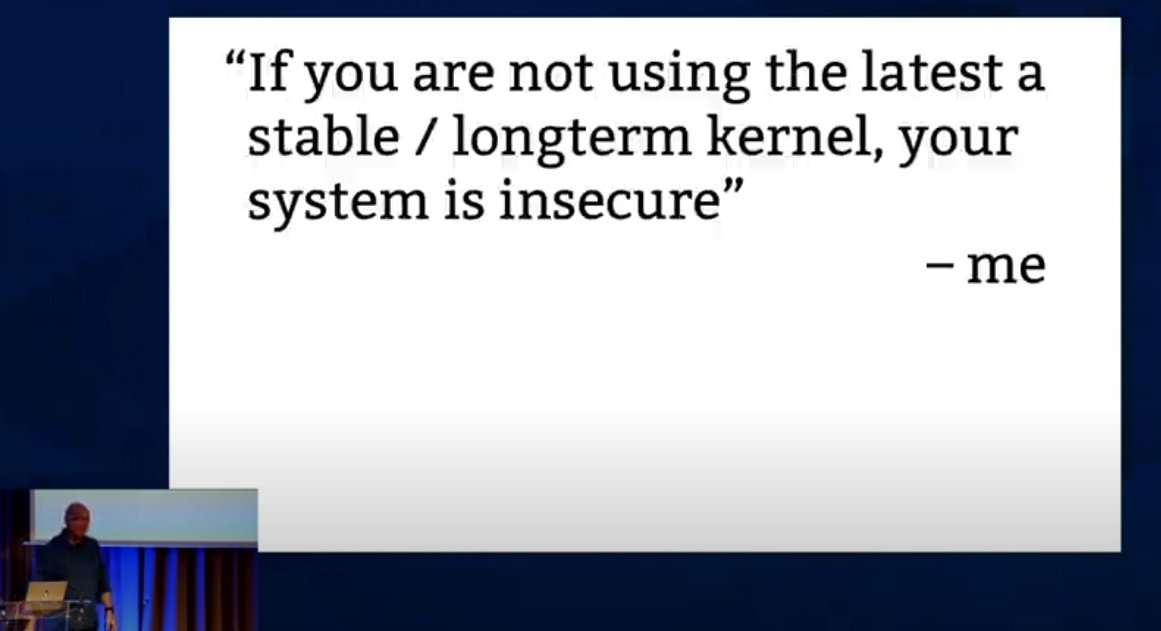

KernelRecipes@fosstodon.org Keep it in mind! First one is a kind of personal mantra #kr2024

Kees Cook (old account)

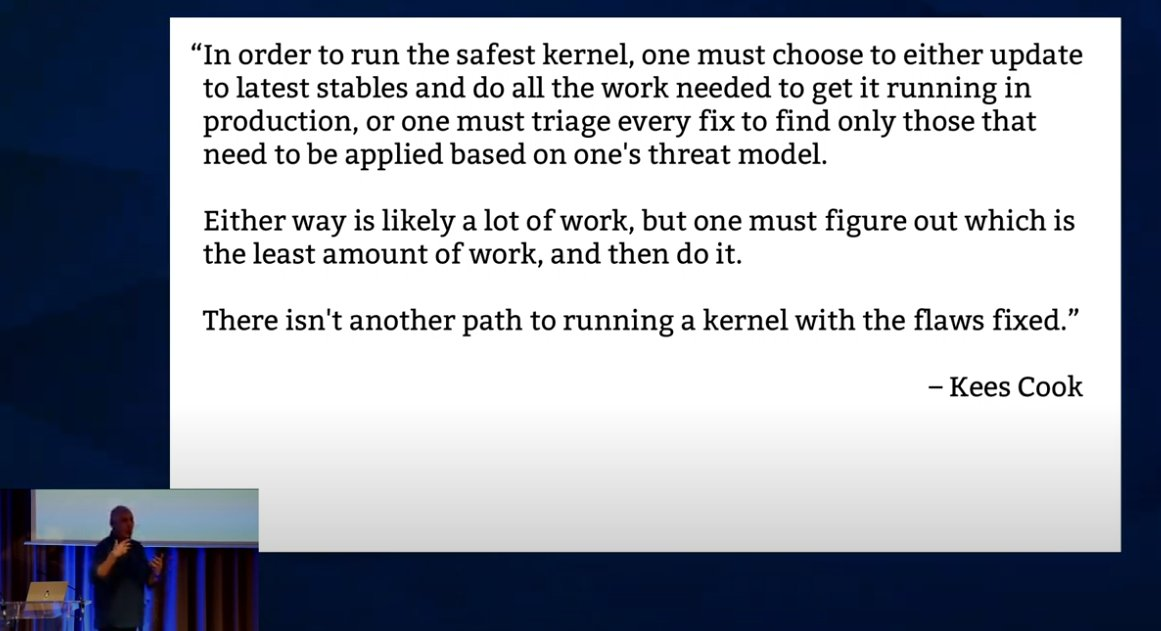

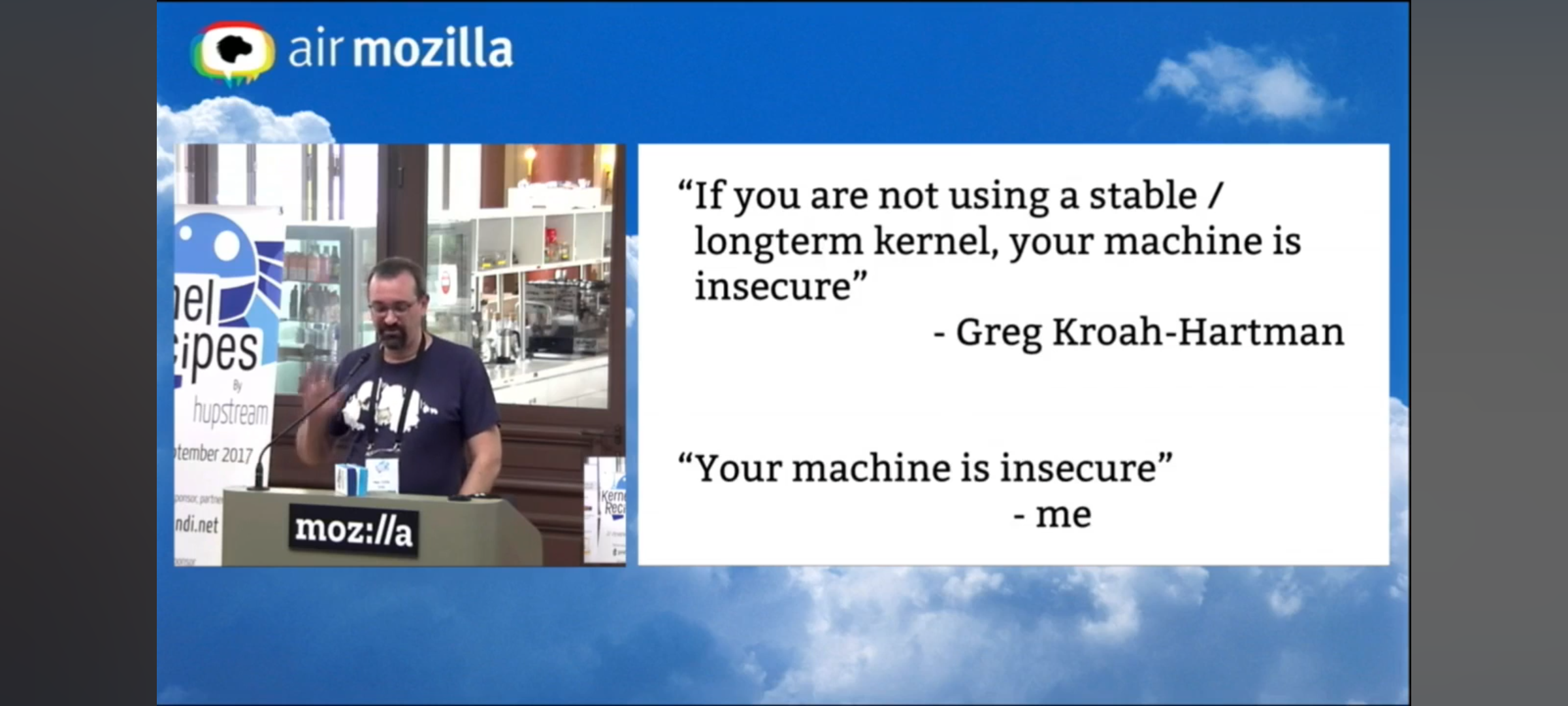

kees@fosstodon.org @KernelRecipes Followed up by my more nihilistic take:

https://youtu.be/b2_HAH2kX04#t=373

"Your machine is insecure."

Bottom line remains the same: we have to eliminate bug classes. I'm really excited by all the work that continues on this front between fixing the C language itself and the adoption of Rust. We continue to make steady progress, but can always use more help. :)

Kees Cook (old account)

kees@fosstodon.org @KernelRecipes Sometimes people need reminding that CVEs are just a stand-in for the real goal: fixing vulnerabilities. The point of "the deployment cannot have any CVEs" isn't an arbitrary check list. The goal is to get as close as possible to "the deployment cannot have any vulnerabilities".

The Linux Kernel CNA solves the "tons of false negatives" problem (but creates the "a few false positives" problem), but the result is a more accurate mapping from vulnerabilities to CVEs.

Kees Cook (old account)

kees@fosstodon.org @KernelRecipes So the conclusion from this is that anyone saying "we can't keep up with all the CVEs" is admitting that they can't keep up with all the current (and past!) vulnerabilities present in the kernel.

Either they don't have a threat model, can't triage patches against their threat model, or can't keep up with stable releases due to whatever deployment testing gaps they have.

There are very few deployments I'm aware of that can, honestly. This is hardly new, but now it is more visible.

Kees Cook (old account)

kees@fosstodon.org @KernelRecipes But this is why I've been saying for more than a decade, and others have said for way longer, that the solution is eliminating classes of flaws.

Bailing water out of the boat is Sisyphean without also patching the holes in the hull. But since we're already in the water, we have to do both. And the more we can fix the cause of the flaws the less bailing we need to do; so more Rust, safer C.

I look forward to finding design issue vulns instead of the flood of memory safety issues.

Thomas Depierre

Di4na@hachyderm.io @kurtseifried @kees @KernelRecipes i mean that or... We can also reclassify the problem from "a bug/programming mistake" to "being forced to use an unergonomic tool".

I refuse to let a tablesaw without sawstop technology in my workshop. Why should we not analyze our tools the same way?

Thomas Depierre

Di4na@hachyderm.io @kurtseifried @kees @KernelRecipes sure, but we are not talking about that. We are not even at the "wait, the tools themselves could be dangerous" step

Thomas Depierre

Di4na@hachyderm.io @kurtseifried @kees @KernelRecipes also note that the use cases for planers and wood chisels have been massively reduced in modern "industrial" woodworking, by changing the kind of joints we do, the materials we use, and the target end products

Jacen

jacen@tech.lgbt @kees @KernelRecipes

I think many people agree, the difficulty is to get there: it isn't really realistic to freeze C code while replacing it with Rust, which means the bindings will keep breaking while Rust code is developed, causing a lot of pain to whoever maintains the bindings (making subsystem maintainers say "not me")

This, as far as I can see on the mailing list I follow, creates some tension, and I predict it will continue to do so in the coming years, because there is no simple way to convert such a large active codebase with so many users

Kevin Brosnan

kbrosnan@mastodon.social As an interested outsider, the state of Linux CI looks bizarre to me. It appears largely left to consumers and developers.

When the cycle time for feedback on a commit is days or weeks, the dev who wrote the commit has moved on to new work. It is difficult to get that person to care about your problem.

I see the KernelCI project; however, it is not clear whether build failures on it are cause for a backout of the commit.

Jacen

jacen@tech.lgbt @kbrosnan @kees @KernelRecipes

I think you are right: Linux CI is left to consumers and developers. The thing is, there isn't really anyone else. There is no enterprise owning and hosting the kernel. I think only 2 developers are paid by the Linux Foundation, with no infrastructure to do CI on every subsystem tree.

On the media side, I see 2 CIs running tests, one from Google, the other from Intel, with someone from Cisco in CC.

That being said, you should expect days before getting feedback on a commit you submit for review (by people not paid to do so), and, reasonably, weeks (or months for a significant change, or 20 years for PREEMPT_RT) before getting it merged in the subsystem.

The main issue with the kernel is to maintain it. So, I guess, for many maintainers, if you can't maintain your code, maybe it's best to leave it out of the kernel tree.

David Heidelberg

okias@floss.social @kbrosnan @jacen @kees @KernelRecipes CI, while it provides amazing value when set up properly, is expensive to run. Even on smaller projects such as Mesa3D, it needs a team of maintainers to develop it and keep it running, not to mention the teams who take care of the physical farms. Just as people agree on one mainline, I think it would be wise for all involved companies to join #KernelCI and work on pre-merge solutions instead of doing their own post-merge.