Posts 505 · Following 144 · Followers 173

I like cycling, powerlifting, bad video games and metal.

Otherwise, I occupy my time with various bits in RISC-V land.

~useless, placeholder, website: https://www.conchuod.ie/

Conor Dooley

conor

Shame that the RISC-V and Kernel devrooms at FOSDEM tomorrow clash time wise.

I suppose it's convenient that most of the kernel room is about BPF/fs & meeting people is more beneficial than attending talks.

PWM talk and then try to get into the RISC-V room I guess...

Conor Dooley

conor

I like the healthy dose of scepticism about marketing claims that meeting people in person at conferences provides :)

Conor Dooley

conor

re: https://social.kernel.org/notice/AS3DZjXqKnHXtM8ZEG

Problem 1:

Nick fixed this about a year ago. To quote his commit message:

```
In case the DTB provided by the bootloader/BootROM is before the kernel
image or outside /memory, we won't be able to access it through the
linear mapping, and get a segfault on setup_arch(). Currently OpenSBI
relocates DTB but that's not always the case (e.g. if FW_JUMP_FDT_ADDR
is not specified), and it's also not the most portable approach since
the default FW_JUMP_FDT_ADDR of the generic platform relocates the DTB
at a specific offset that may not be available. To avoid this situation
copy DTB so that it's visible through the linear mapping.
```

Problem 2:

I fixed this one a few months ago. The reserved memory scanning takes place too
early during init, before paging has been enabled. As a result, once paging *is*
enabled & the TLB flushed, trying to read the label names of reserved memory
regions causes a kernel panic. Apparently we were just the first ones to try
to write a remoteproc driver for RISC-V!

The solution was moving the call to early_init_fdt_scan_reserved_mem() after
the dtb was properly mapped.

Problem 3:

If the dtb is located outside of memory regions that the kernel is aware of, it
gets remapped into a region that the kernel *is* aware of.

However, this is done before we check for any reserved memories etc. If there
happened to be a section of unusable memory in the DT, that was then carved
out using a reserved memory region with a "no-map" property, it is entirely
possible that the DT would end up there.

This was introduced by Nick's fix for problem 1 & I've been avoiding it by just
putting the dtb somewhere that I know will be accessible to the kernel, and thus
avoiding the remapping.
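
The ordering issue in problem 3 can be sketched with a toy model. This is only an illustration under stated assumptions: the address ranges, the `pick_top_down` helper and the idea that the relocated DTB is placed top-down are all made up for the example, and none of it is the kernel's memblock API.

```python
# Toy model only: plain (start, end) tuples, not the kernel's memblock API.

def overlaps(a, b):
    """True if half-open ranges a and b share at least one address."""
    return a[0] < b[1] and b[0] < a[1]

def pick_top_down(memory, size, reserved=()):
    """Return the highest range of `size` bytes inside `memory` that avoids
    every range in `reserved`, or None if nothing fits."""
    for start, end in sorted(memory, reverse=True):
        candidate_end = end
        while candidate_end - size >= start:
            candidate = (candidate_end - size, candidate_end)
            clash = next((r for r in reserved if overlaps(candidate, r)), None)
            if clash is None:
                return candidate
            # Slide below the clashing reservation and try again.
            candidate_end = clash[0]
    return None

# Hypothetical layout: two banks of RAM, with the top of the upper bank
# described as a "no-map" reserved region in the devicetree.
memory = [(0x8000_0000, 0xA000_0000), (0x10_0000_0000, 0x10_4000_0000)]
no_map = [(0x10_3000_0000, 0x10_4000_0000)]
dtb_size = 0x1_0000

# Relocating before the reserved-memory scan: the allocator has no idea
# that the top of the upper bank is off limits.
early = pick_top_down(memory, dtb_size)
# Relocating after the scan: the no-map range is skipped.
late = pick_top_down(memory, dtb_size, reserved=no_map)

print(hex(early[0]), any(overlaps(early, r) for r in no_map))
print(hex(late[0]), any(overlaps(late, r) for r in no_map))
```

In this model the early placement lands inside the no-map range while the late one does not, which is the shape of the bug described above.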

Problem 4:

Introduced by my fix for problem 2. RISC-V uses the top-down method for looking
up memblocks for reserved memory regions. In a sparse configuration, if there
were an uppermost range, all of which was intended to be used as a DMA
region, memory allocated at some point in paging_init() would consume very small
sections of this upper DMA region. This can be observed on some configurations
on PolarFire SoC, and was easy to spot with memblock debug enabled.

These small allocations mean that when, in early_init_fdt_scan_reserved_mem(),
we look up the reserved memory node that is intended to consume the whole memory
region, there is no longer enough space for this mapping.

This is worse if the memory region is non-coherent, as the system will then
immediately crash.

Problem 4 can be fixed by using bottom-up searching for memblocks. This may
fix problem 3 too, I just haven't tested it.

I'm super unsure as to whether switching the search order has some consequences
that I am just not aware of, but I probably won't be any the wiser until I send
a patch!
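
To make the top-down versus bottom-up difference in problem 4 concrete, here is a minimal sketch. It is a toy free-list allocator, loosely inspired by memblock but sharing none of its real API; the bank addresses, the 4 KiB allocation size and the `alloc`, `still_free` and `boot` names are assumptions made up for the example.

```python
# Toy model only: a free list of half-open (start, end) ranges.

def alloc(free, size, bottom_up):
    """Carve `size` bytes out of the lowest (bottom_up) or highest free
    range that can hold it, and return the allocated range."""
    for rng in sorted(free, reverse=not bottom_up):
        start, end = rng
        if end - start < size:
            continue
        free.remove(rng)
        if bottom_up:
            got, rest = (start, start + size), (start + size, end)
        else:
            got, rest = (end - size, end), (start, end - size)
        if rest[0] < rest[1]:
            free.append(rest)
        return got
    raise MemoryError("no free range large enough")

def still_free(free, wanted):
    """True if `wanted` is still entirely contained in one free range."""
    return any(s <= wanted[0] and wanted[1] <= e for s, e in free)

def boot(bottom_up):
    """Do one small early allocation, then report whether the whole upper
    bank could still be claimed as a single reserved DMA region."""
    low_bank = (0x8000_0000, 0xA000_0000)        # hypothetical normal RAM
    dma_bank = (0x10_0000_0000, 0x10_1000_0000)  # hypothetical DMA-only bank
    free = [low_bank, dma_bank]
    alloc(free, 0x1000, bottom_up)  # stand-in for a paging_init() allocation
    return still_free(free, dma_bank)

print("top-down :", boot(bottom_up=False))
print("bottom-up:", boot(bottom_up=True))
```

With top-down searching the small allocation nibbles the top of the DMA bank, so the later whole-bank reservation no longer fits; with bottom-up searching it comes out of the low bank instead.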

Conor Dooley

conor

Found another reserved memory related problem in RISC-V's early init code today. This one appears to actually have been caused by my last fix, but that makes for 4 problems in the same bit of code.

I have a fix for it, but it's either adding yet another band-aid, introducing a behaviour change or trying to find the time to do a significant overhaul and hoping not to make it worse!

Conor Dooley

conor

https://www.devicetree.org/

Devicetree devicetree DeviceTree

How am I supposed to know when the organisation itself doesn't!

Conor Dooley

conor

@monsieuricon been getting an error 500 accessing my "home timeline" on the social.kernel.org website. "Public timeline" works though. Any idea what may be the cause?

Conor Dooley

conor

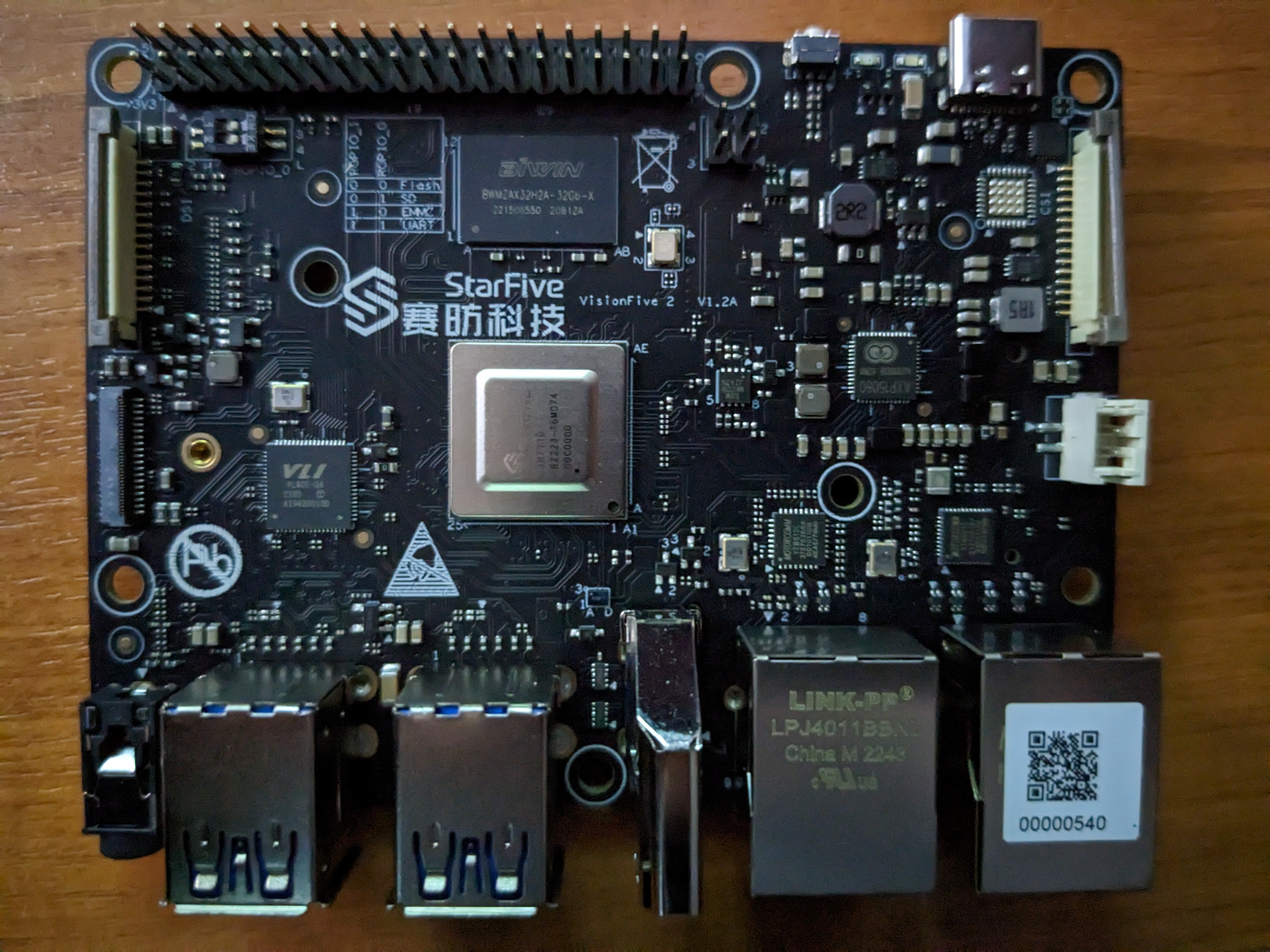

Welp, my visionfive v2 might be a little on the kaput side?

Been running the same boot process since I got my board.

tftp a fitImage w/ initramfs and then boot w/ bootm as might be expected.

First time I powered it on today, it bootlooped once but then worked properly.

Rebooted it and since then it's been bootlooping in U-Boot non stop.

`bootm ramdisk` or `bootm loados` tend to be where it dies.

Randomly had it start working again just now, while manually entering the commands for a couple boots in a row.

Allowing autoboot, which runs the same commands, didn't.

Back to not working at all again now.

🤔

Conor Dooley

conor

https://social.kernel.org/notice/ARVWmKdhWckD0iaCWm

Looked at this again today, having fixed some build issues in the pinctrl driver. Looks like the patches posted to the ML do the right thing after all, woops!

I had been trying the "upstream" branch in their GitHub repo, so that I didn't have to fix the pinctrl driver in order to try the board out.

The branch is older than I thought it was, so perhaps it was not a good idea to do that & I came a cropper as a result.

I feel terrible about this sort of thing, I hope I didn't waste too much of their time.

Conor Dooley

conor

2022 contribution recap

I usually hate talking about anything that I've done, but reading Nathan's
yearly recap* inspired me to bite the bullet... so here's a wee bit of a
recap of my one, from what we in Ireland call a blow-in :)

* https://nathanchance.dev/posts/2022-cbl-retrospective/

## Linux

Before 2022, I had one driver and its bindings upstreamed & had sent a v1 of
updating the initial PolarFire SoC/Icicle device tree to something closer to
reality.

2022 was a little different, and I've kinda dived into working upstream.
Overall it's been great, and I've spent the year learning non-stop.
Engaging with upstream has led to development of my skills that my small
(and very busy) team at work was not able to provide.
By-and-large, everyone I've worked with has been nice and/or helpful, so thanks
to everyone that's helped me along the way :)

### Things I managed to finish

172 non-merge commits:

- 50 for devicetrees
- 45 for dt-bindings
- 113+ explicitly PolarFire SoC related

In summary:

- overhaul & peripheral additions to the PolarFire SoC/Icicle device tree
- upstreamed dts for other dev kits
- upstreamed PolarFire SoC clk, spi, i2c, rtc, reset, musb, sys controller &
  hwrng drivers
- re-wrote PolarFire SoC's clk driver to actually match the clock parentage in
  hardware & created a driver for fabric clocks in the process
- cleaned up all RISC-V device trees to the point that we were error free, in
  the process doing a fair few of the 45 binding patches
- fixed RISC-V's topology reporting :)
- started the conversion process from SOC\_FOO to ARCH\_FOO & cleaned up a lot
  of Kconfig.socs, but there's more work to be done there!
- fixes for when the above went wrong!

So a fair bit different to my previous years' contributions :)

### Other efforts

160 other contributions:

- 28 Acked-by
- 21 Reported-by
- 45 Tested-by, enough to feature in the top testers for one release...
- 81 Reviewed-by

Some of the above applies to issues people spotted with my contributions, but
the majority came in the second half of the year as I've gotten more involved
with reviewing stuff aimed at linux-riscv.
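
For anyone wanting to tally similar numbers for themselves, a rough sketch follows. It is not necessarily how the figures above were produced: the `count_trailers` helper, the sample commit messages and the people in them are all made up, and in practice the text would come from something like `git log --format=%B` over the range of interest.

```python
import re
from collections import Counter

# Trailer names taken from the list above; matching is done on plain text.
TRAILER = re.compile(
    r"^(Acked-by|Reported-by|Tested-by|Reviewed-by):\s*(.+)$",
    re.MULTILINE,
)

def count_trailers(log_text, who):
    """Count review-style trailers in `log_text` whose value mentions `who`."""
    counts = Counter()
    for tag, value in TRAILER.findall(log_text):
        if who.lower() in value.lower():
            counts[tag] += 1
    return counts

# Made-up commit messages with made-up people, purely for illustration.
sample_log = """\
riscv: fix a thing

Reported-by: Jane Example <jane@example.com>
Reviewed-by: Sam Placeholder <sam@example.com>

dt-bindings: describe a widget

Reviewed-by: Jane Example <jane@example.com>
Acked-by: Jane Example <jane@example.com>
Tested-by: Sam Placeholder <sam@example.com>
"""

print(count_trailers(sample_log, "jane@example.com"))
```

Matching on the email address rather than the name avoids double counting people who sign off with slightly different spellings.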

C3\_STOP was enabled for the RISC-V timer driver, sending me on a lengthy
bisection to find the root cause - it had been enabled as the letter-of-the-spec
compliant thead arch timer implementation needs it, but without the required
broadcast timer setup done! Between the SBI spec, the regression reports and
the various patches it took ~3 months to actually get fixed - but was a fun one
due to the confusion from all parties. Thanks in particular to Samuel Holland
for figuring out /why/ C3\_STOP broke stuff.

I initially took on applying patches for the mpfs devicetrees at some point
in Q2 or so. More recently, I have taken over all RISC-V devicetrees that are
without specific maintainership now that RISC-V dts patches are routed via the
soc tree. Ditto for drivers/{soc,firmware}, although the latter does not yet
exist for RISC-V.. Hopefully that all makes Palmer's life easier and the patch
application time shorter!

### LPC

LPC was in my backyard this year, so I went along to that.
Not really one for public speaking, but I ended up talking about what to
do with Kconfig.socs for RISC-V.
No intention of repeating that next year, that's for sure.

The main benefit of LPC though was meeting people for the first time.
In particular, meeting Palmer kicked off the work we've done in getting the
linux-riscv patchwork instance up and running.

### Patchwork/CI

After LPC, we wiped the patchwork instance of old content. Bjorn Topel ported
NIPA to the instance & I got it running in the Microchip FPGA CI. We've since
done a bit of work on finishing that port & sorting out some niggling issues
with the error reporting. Palmer runs a weekly patchwork sync meeting now & it
appears to have made both sides of the upstreaming process easier..

Additionally, I realised that RISC-V has little test coverage. I added a bunch
of pipelines to our internal CI that test linux-next, stable kernels we care
about, and of course mainline. Found a few bugs in the process, including the
aforementioned C3\_STOP issue!

## QEMU

A handful of patches, perhaps 10-15. I fixed the direct kernel boot for
PolarFire SoC on recent kernels & did some general virt/spike machine dtb
cleanup. Maybe in 2023 I'll submit some more support for mpfs peripherals, but
that entirely depends on what I need to use QEMU to debug!

## OpenSBI

Two minor docs fixes! Not much to speak of really. I'd imagine that 2023 will
be relatively similar contribution wise.

## U-Boot

Not really much to speak of here either. Apart from porting trivial bugfixes
from Linux to U-Boot, I re-worked the clock driver for MPFS so that it could
handle both the incorrect devicetree that was upstreamed originally & the one
which aligns with the dt-binding. If only things weren't upstreamed before
their bindings were approved...

Beyond stuff that is directly $dayjob related, I think I have a single review
tag in the whole year. Hopefully there'll be more there in 2023.

As with Linux, I have set up internal CI to test U-Boot versions that we care
about.

## Conclusion

As you can tell, one thing absorbs most of my time in terms of upstream work. I
don't see that changing in 2023. Perhaps in 2023 I'll submit a driver written in
Rust. I struggle for motivation without a goal, so writing one would be a nice
jump into more interesting Rust code than the two TUI apps I wrote in 2022. One
of those runs my board farm and, while basic, is something I use every day.

Or perhaps I'll check out rustSBI...

Conor Dooley

conor

https://social.kernel.org/notice/ARTnwlSzwjbS0yDXJA

I figured out my problem... The Quick Start Guide says use a particular uart, U-Boot uses said uart & the "devel" branch of the StarFive GitHub uses it too.

But for some reason the patches for Linux do use a different uart as stdout-path!

Conor Dooley

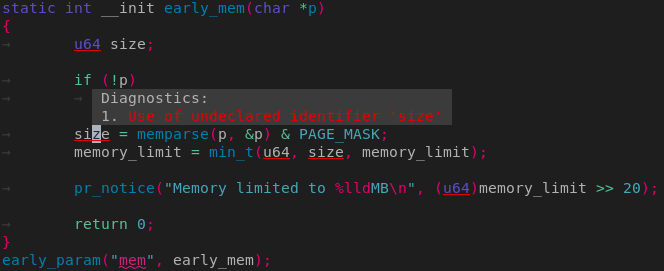

conor

The diagnostics from clangd really suffer from too many false positives. Mostly ends up getting ignored, even when it's right, as a result :/

Conor Dooley

conor

I guess since there is no quote retweet here,

https://social.kernel.org/notice/ARLXuuUYGeIWtYMHbs

>every new contributor has to build that list from scratch and suffer until they figured it out.

Whenever I write something critical I think of one of the reviews I received early on, for something that was admittedly sub-par, and hope not to come across the way that email did.

Conor Dooley

conor

Heh, this would've been nice to have attended a year ago:

https://fosdem.org/2023/schedule/event/pwm/

"The audience learns the general concept of PWMs, about the corner cases in their usage and driver design, and how to avoid the common pitfalls often pointed out to authors of new PWM drivers during the review process."

Conor Dooley

conor

Listening to a podcast for the first time, where the hosts do not introduce themselves before bringing in their guest is *super* disconcerting.

Now I got 3 voices that I cannot tell apart, rather than having some time to acclimatise to the hosts...

Conor Dooley

conor

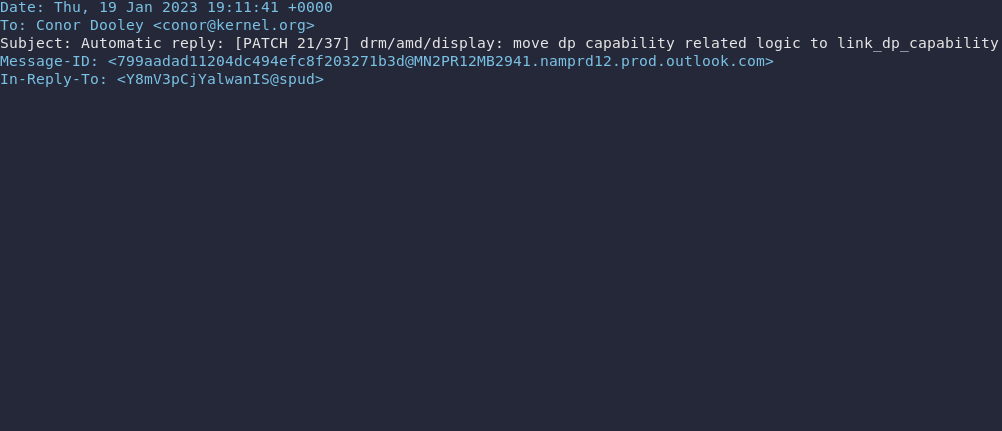

kernel.org, protonmail & wkd: incompatibility

I ran afoul of this about a year ago, and have noticed others hitting the same issue a few times since.

Kernel.org publishes the WKD (Web Key Directory) for all developers who have kernel.org accounts, which is grand - but protonmail has a feature where it will automagically check for WKD and use it to encrypt messages.

Mailing lists & recipients without WKD will get the regular old copy of the mail.

To quote one of the other people that ran into this:

"they told me then that it's a super-pro builtin feature that I can't disable 🤡"

That's the same answer I got & for both of us, the solution was just to leave protonmail.

Maintainers don't know about this & get "annoyed" with patch submitters & it is equally frustrating on the other side trying to figure out why your patchset has been encrypted...

The most recent occurrence of this is here:

https://lore.kernel.org/all/Y6slQLto568WfmfZ@spud/

I feel like it should be documented somewhere that this issue exists, but process/email-clients.txt doesn't seem like quite the right place. Perhaps the patches documenting it should CC the users@linux.kernel.org ML?
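
For context on what a mail client does when it "checks for WKD", here is a small sketch of how the lookup URLs are derived from an address. The scheme used (SHA-1 of the lowercased local part, z-base-32 encoded, under `.well-known/openpgpkey`) is my reading of the OpenPGP Web Key Directory draft rather than anything stated in this post, and the address and the `wkd_hash` and `wkd_urls` names are placeholders. Nothing here touches the network.

```python
import hashlib
from urllib.parse import quote

# z-base-32 alphabet, as used by the WKD draft for the hashed local part.
ZBASE32 = "ybndrfg8ejkmcpqxot1uwisza345h769"

def wkd_hash(local_part):
    """SHA-1 the lowercased local part and z-base-32 encode the 160 bits."""
    digest = hashlib.sha1(local_part.lower().encode("utf-8")).digest()
    bits = int.from_bytes(digest, "big")
    # 160 bits split into 32 groups of 5, most significant group first.
    return "".join(ZBASE32[(bits >> shift) & 0x1F] for shift in range(155, -1, -5))

def wkd_urls(address):
    """Return the (advanced, direct) lookup URLs a client would try."""
    local, _, domain = address.rpartition("@")
    domain = domain.lower()
    hashed = wkd_hash(local)
    query = "?l=" + quote(local)
    advanced = (
        f"https://openpgpkey.{domain}/.well-known/openpgpkey/"
        f"{domain}/hu/{hashed}{query}"
    )
    direct = f"https://{domain}/.well-known/openpgpkey/hu/{hashed}{query}"
    return advanced, direct

# Placeholder address, not a real recipient.
for url in wkd_urls("Joe.Doe@Example.ORG"):
    print(url)
```

If either URL serves a key, a client that does this automatically will encrypt to that recipient, while list addresses with no published key get the plaintext copy.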

Conor Dooley

conor

USCSB videos on YouTube are so amazingly well done. I don't care really about chemical processing etc, but you bet I'll watch anything they put out..