Posts 533

Following 489

Followers 400

📡 https://w7txt.net/

🐧 https://blog.namei.org/

☠️ https://www.facebook.com/w7txt

James Morris

jmorris

Mickaël Salaün

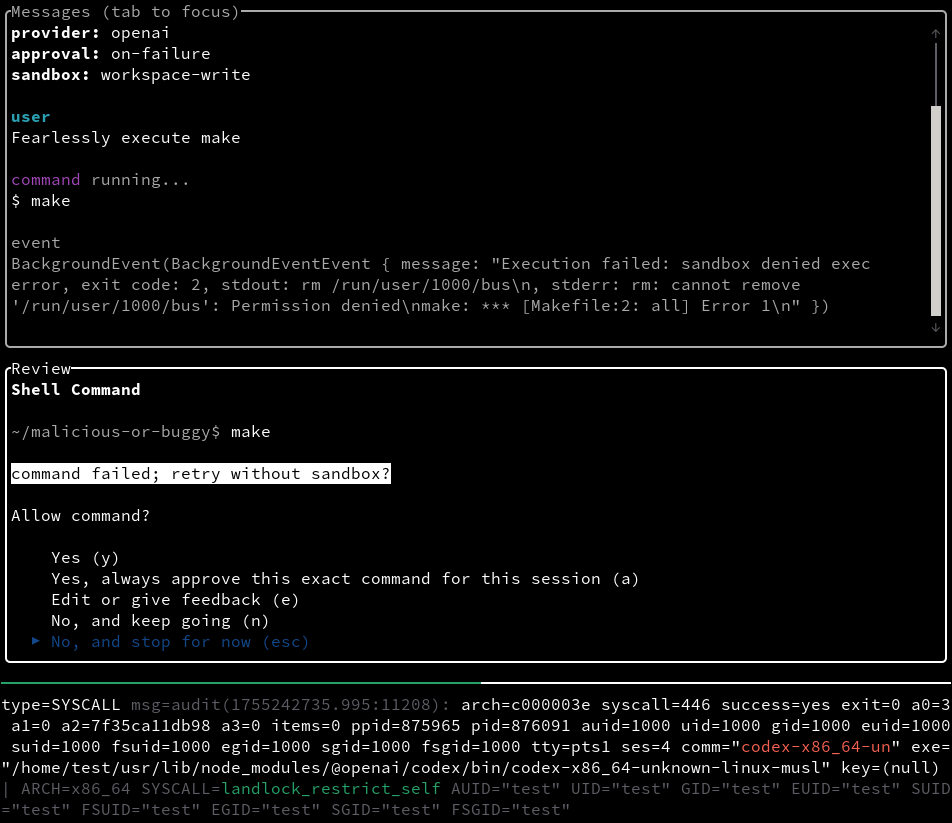

l0kod@mastodon.social

AI agents can potentially gain extensive access to user data, and even write or execute arbitrary code.

OpenAI Codex CLI uses #Landlock sandboxing to reduce the risk of buggy or malicious commands: https://github.com/openai/codex/pull/763

For now, it only blocks arbitrary file changes, but there’s room to strengthen protections further, and the ongoing rewrite in #Rust will help: https://github.com/openai/codex/pull/629

Landlock is designed for exactly this kind of use case, providing unprivileged and flexible access control.

James Morris

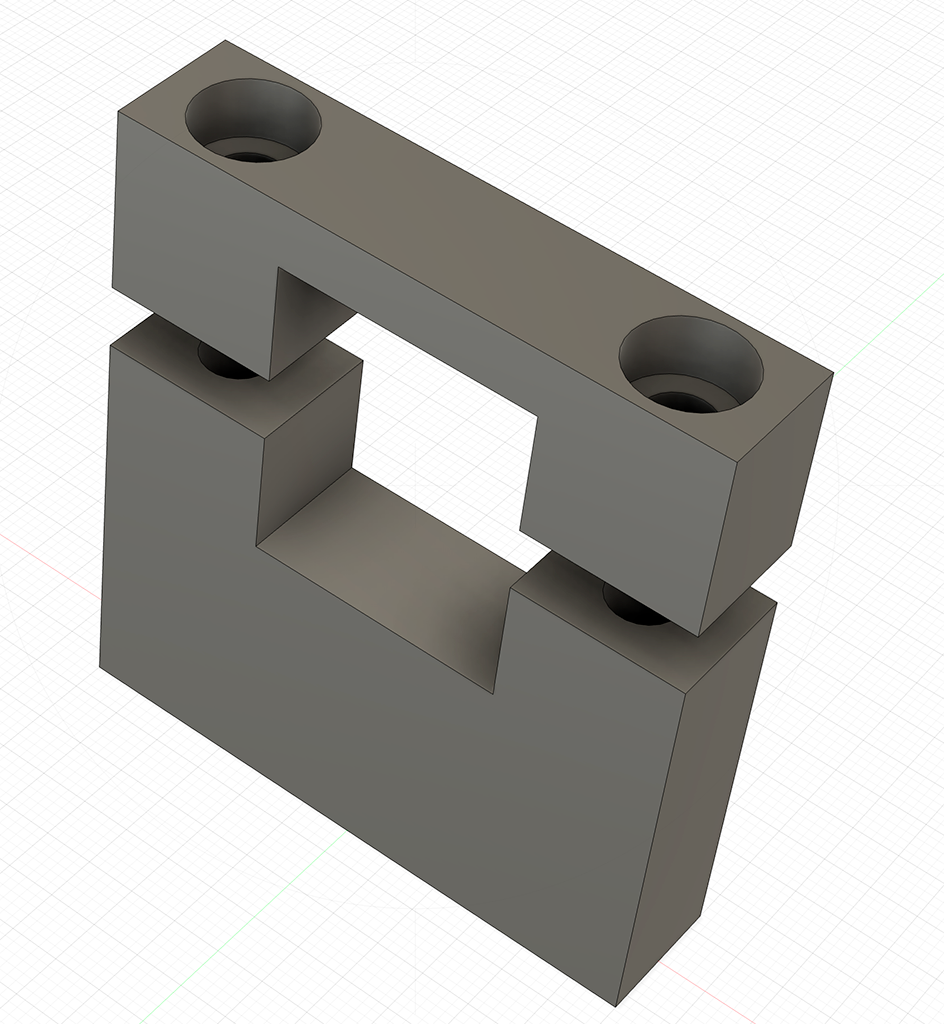

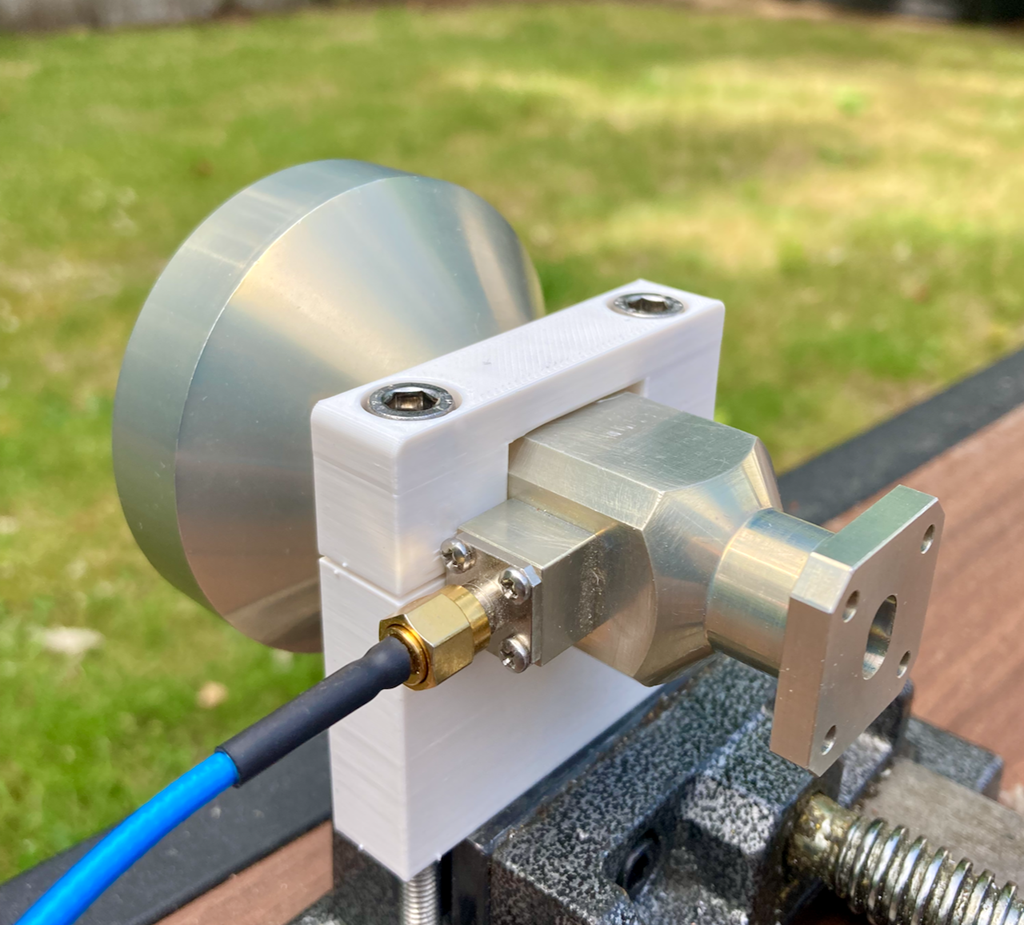

jmorris

https://github.com/xjamesmorris/dual-band-10-24-ghz-feed-mount

#amateurradio #hamradio #3dprinting #rf #microwave

James Morris

jmorris

RE: https://metapixl.com/p/root42/856229543832290223

James Morris

jmorris

I like the idea of having something which can do deep code review, e.g. does this code actually do what I specified? Are there any bugs, the obvious ones but also the not-so-obvious ones in a complex system? Have I broken layering abstractions? Is the code maintainable? What does the maintainer of this subsystem expect? Etc.

James Morris

jmorris

David Chisnall (*Now with 50% more sarcasm!*)

david_chisnall@infosec.exchange

Excellent news yesterday: the #CHERIoT RTOS paper was accepted at SOSP!

Huge thanks to @hle, who led on rewriting the rejected submission and made numerous improvements to the implementation.

We now have CHERIoT papers in top architecture and OS venues, so I guess security and networking are the next places to aim for!