Posts: 267

Following: 39

Followers: 287

#standwithukraine 🇵🇱 🇪🇺 🇺🇦 🇨🇭

IRC: krzk

Kernel work related account. Other accounts of mine: @krzk@mastodon.social

Krzysztof Kozlowski

repeated

Anne Applebaum

anneapplebaum@journa.host

Today is the annual day of remembrance for the Holodomor, the Ukrainian famine. 90 years ago Stalin sent activists to confiscate food from Ukrainian peasants. Millions died.

To mark this day, Putin sent dozens of drones to attack Kyiv.

Like Stalin, Putin wants to erase Ukraine.

Krzysztof Kozlowski

krzk

@cas @monsieuricon I cannot access BIOS on company laptop :) and even if I could I think it's still Lenovo's fault - you don't swap keys in a keyboard after 30 years of having the CTRL at that place :) (Y looks at Z.... anyone uses German keyboards? :) )

Krzysztof Kozlowski

krzk

@monsieuricon There is a special place in hell for designers who swapped CTRL and Fn... I am staying away from Lenovo, but it is tricky - many companies buy these for employees. :(

Krzysztof Kozlowski

krzk

Edited 2 years ago

Looking at changes to Devicetree sources (DTS) can give some insight into which ARM64 platforms are the most active. DTS represents the real hardware, so any new hardware block for an existing SoC, any new SoC or any new board requires DTS changes. It's an approximation, but easy to measure and still quite informative. Let's take a look at the most developed ARM64 platforms in the upstream Linux kernel since the last LTS (v6.1):

$ git diff --dirstat=changes,1 v6.1..v6.6 -- arch/arm64/boot/dts/ | sort -nr

44.6% arch/arm64/boot/dts/qcom/

12.2% arch/arm64/boot/dts/ti/

10.4% arch/arm64/boot/dts/freescale/

10.1% arch/arm64/boot/dts/rockchip/

5.0% arch/arm64/boot/dts/nvidia/

4.3% arch/arm64/boot/dts/mediatek/

3.9% arch/arm64/boot/dts/renesas/

3.5% arch/arm64/boot/dts/apple/

3.4% (the rest)

2.2% arch/arm64/boot/dts/amlogic/

Almost 45% of all changes to ARM64-related hardware in the upstream kernel were for Qualcomm SoCs and boards. That's quite a stunning number.
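As a rough follow-up to the numbers above, the per-directory shares can be summed to see how concentrated the activity is. The sketch below assumes input in the format printed by `git diff --dirstat`; the helper name `top_share` is made up for this example and is not part of git.

```shell
# Hypothetical helper: sum the shares of the N largest entries in
# `git diff --dirstat` output read from stdin (lines like " 44.6% path/").
top_share() {
    n="$1"
    # Sort numerically by percentage (largest first), keep the top N lines,
    # strip the "%" sign and add the values up.
    LC_ALL=C sort -nr | head -n "$n" |
        LC_ALL=C awk '{ sub(/%/, "", $1); sum += $1 } END { printf "%.1f\n", sum }'
}

# Example usage inside a kernel checkout (not run here):
#   git diff --dirstat=changes,1 v6.1..v6.6 -- arch/arm64/boot/dts/ | top_share 3
```

For the output quoted above, the three largest entries (qcom, ti, freescale) add up to 67.2%.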

Krzysztof Kozlowski

repeated

K. Ryabitsev-Prime 🍁

monsieuricon

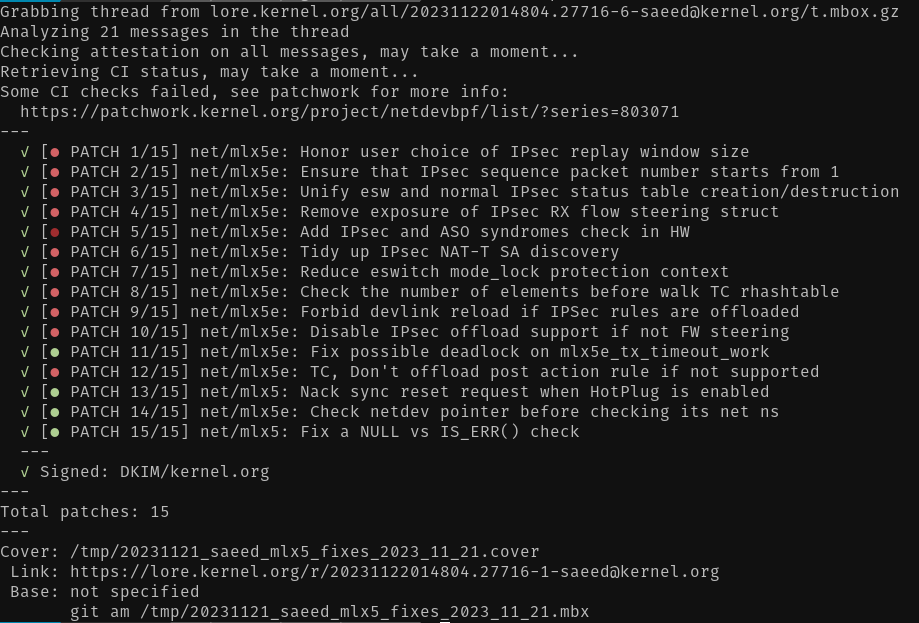

When patchwork integration is configured, b4 will now retrieve the CI status of each patch as well.

Krzysztof Kozlowski

krzk

@ptesarik @oleksandr @suse @kernellogger v2.6.40 is a development kernel, so it's actually a perfectly fine and valid release. :)

Krzysztof Kozlowski

krzk

Edited 2 years ago

@ptesarik @oleksandr @kernellogger @suse Appreciate @suse kernels? That's bollocks! Suse has the same 4baf12181 commit in their SLE15-SP6 tree! A whole team of engineers backported the same commit questioned here, instead of working on upstream stable backports, and this is supposed to be the argument to "appreciate" @suse.

You get absolutely nothing with enterprise kernels. If a bug is in mainline or upstream stable, Suse and Redhat have the bug as well.

Krzysztof Kozlowski

krzk

@oleksandr @kernellogger @ptesarik @suse Although to be honest, that v2.6 (v2.6.32 to be specific) was kept as SLTS by the community till 2016.

Krzysztof Kozlowski

krzk

@oleksandr @suse @kernellogger @ptesarik

v4.14 is an SLTS, so it is old indeed, but we do not compare it with RHEL v4.18. A few years ago (2020) RHEL was still updating v3.10. This is the Uber-Franken-kernel we talk about.

In 2018 RHEL released a v2.6 kernel. v2.6, can you imagine?

And do you know that features also get backported to enterprise distro Frankenkernels?

Krzysztof Kozlowski

krzk

@oleksandr @suse @kernellogger @ptesarik "Frankenkernel" is a very old kernel which consists of thousands of backports, thus like Frankenstein.

Stable kernels are not that, because they cease to exist at some point. You need to move to a newer kernel, thus backporting stops. Beyond that point, it's the distro (Redhat, Suse, also Canonical for some extended support) that creates the Frankenkernel.

And if you ever want to call stable upstream a "Frankenkernel", then Suse and Redhat create Uber-super-Franken-master-kernel...

Krzysztof Kozlowski

krzk

Edited 2 years ago

@ptesarik @oleksandr @kernellogger @suse Oh yes, the franken-kernels with selected fixes for 4 year old kernel... Or maybe even older, because Suse customers do not like to update and test their stuff (reasonable, no one likes testing!).

Krzysztof Kozlowski

krzk

@broonie @kernellogger @cas Any CI you want to set up requires learning its fundamentals and its tools. The CI from the forges is no different here - it only brings some different tools.

I've been setting up different CI systems (Jenkins, Buildbot, Github and Gitlab) and the forge ones are really easy to start with. Complex things are complex everywhere, so I would not call it a problem.

Plus you can opt-out and use your own CI, via some git-forge-hooks (if we talk about testing patches during review) or directly by git hooks / git fetch (if we talk about testing applied contributions).

Krzysztof Kozlowski

krzk

@oleksandr @kernellogger That's not a problem of the stable backporting process. At that stage it is not known that this was part of a 16-patch feature set. It is backported from Git. At this stage all the patchset hierarchy is gone and not really important. What's in the Git tree is important.

The problem here was the original submission:

1. Mixing fixes with features in same patchset.

2. Putting fixes into the middle of a patchset.

3. And maybe: tagging a commit as "Fixes" for something that is not a fix.

Therefore please complain to the submitter and optionally to the maintainer, rather than about the stable backport.

Otherwise please explain to me why a fix for a known bug should not be backported, as I described in the previous post.

Krzysztof Kozlowski

krzk

@oleksandr @kernellogger No, it is not an AI. It's a simple and fixed decision: Is this commit fixing a bug in a previous release? If yes, then let's backport to fix that bug.

Now you claim that the "Fixes" tag is for commits not fixing bugs, or that bugs are not important. In the first case, the Fixes tag would be added incorrectly to the commit. It is purely for fixing known bugs. In the second case, how do you know which bugs are important and which are not? All bugs are bugs which we want to fix...
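To make the "simple and fixed decision" concrete, here is a minimal sketch that only checks whether a commit message carries a kernel-style `Fixes:` trailer. It is a simplification for illustration, not the actual stable selection process, and the helper name `has_fixes_tag` is hypothetical.

```shell
# Hypothetical helper: exit 0 when the commit message on stdin contains a
# trailer of the form: Fixes: <12 to 40 hex digits> ("subject")
has_fixes_tag() {
    grep -Eq '^Fixes: [0-9a-f]{12,40} \(".*"\)$'
}

# Example usage in a git checkout (not run here; <commit> is a placeholder):
#   git log -1 --format=%B <commit> | has_fixes_tag && echo "has a Fixes tag"
```

A check like this only says that the tag is present. Whether the tag was added correctly is still up to the submitter and the maintainer.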

Krzysztof Kozlowski

krzk

Edited 2 years ago

@kernellogger @cas I am all in for some way of Git-forges. Not necessarily Github or Gitlab (cloud or self-hosted), but something where setting up workflows/pipelines/CI is trivial. Now, to test patches I need to set up my own CI. Another maintainer needed his own. Yet another needed one more. And all these CIs are not good enough, because testing patches from Patchwork or a mailing list requires a few additional steps. I don't want to spend my time on writing CI or CI-like scripts to interact with Patchwork. I want to write a set of simple rules or commands to check every patch sent (or commit) and output warnings with success/error status.

One can do it easily on a Git forge.

Krzysztof Kozlowski

krzk

@kernellogger Yep, I am all in for the bots.

Checkpatch is garbage, but it is the only garbage we have, so till someone writes something better, please use it.

Krzysztof Kozlowski

krzk

Edited 2 years ago

Just reviewed a patch with... four comments coming entirely from my templates. No need to write anything, just hit some templates. Two out of these four comments were for not using in-kernel tools for patch submission/testing (checkpatch.pl and get_maintainer.pl). Eh. :(

Krzysztof Kozlowski

repeated

@marcan tbh I find this and related posts quite toxic. The IOMMU maintainers are nice guys (one of them is my team colleague) and they don't deserve to have people reading your posts getting the impression that their subsystem is crap.

In the thread you mention doing some unusual (or maybe first time) scenario with it. It's completely normal to encounter bugs in such situations and simply fix them along the way, no need to gloat about it.
