Posts: 4383

Following: 373

Followers: 582

kravietz 🦇

kravietz@agora.echelon.pl

Checking out the details of the #Russia attack on the #Czechia ammunition depot in Vrbětice in 2014, I read with some amusement the then Prime Minister Andrej Babiš’s excuses that “Russian agents were not attacking the Czech Republic, they were attacking the property of a Bulgarian company”, and his further excuse that “the cargo was supposed to explode on the way, not in the Czech Republic”.

That was truly an awesome excuse! The Russians wanted the explosion to happen on the road in some town or on the highway, but it exploded on the spot - okay, time fuses are complicated, not their fault!

To put it bluntly, if back in 2014 there had been a determined EU and #NATO response to that attack, which was of an absolutely belligerent and aggressive nature (people died), such as the one in 2021-2022, i.e. sanctions and a determined expulsion of Russian personnel etc., then the war in 2022 would probably not have broken out at all.

Instead, a few years later, NATO issued a lame statement about “expressing solidarity with the Czech people” - I wonder why, since according to Babiš it was not even the Czech Republic that was attacked? Or was it, after all?

And it only issued it because Bellingcat revealed the real perpetrators of the attack, which the Czech government had apparently been withholding all this time… presumably so as “not to escalate the situation”!

Filip Piekniewski

filippie509@techhub.social

I know every tech bro will squeak like a seal now, but just to keep things in perspective, on:

- 6th flight Saturn V took man to the Moon 55y ago

- but but it wasn't reusable!

- 6th flight space shuttle took a crew of 4 on a 5d mission, landed safely at Edwards base 41y ago

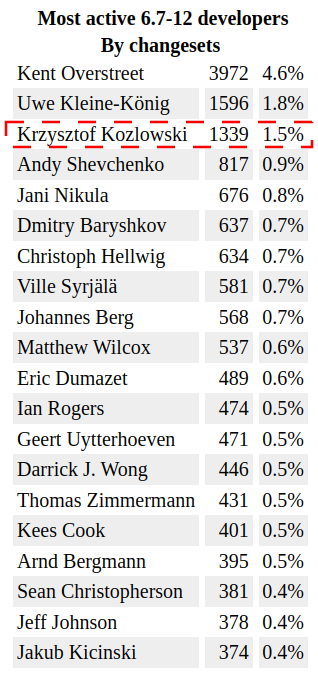

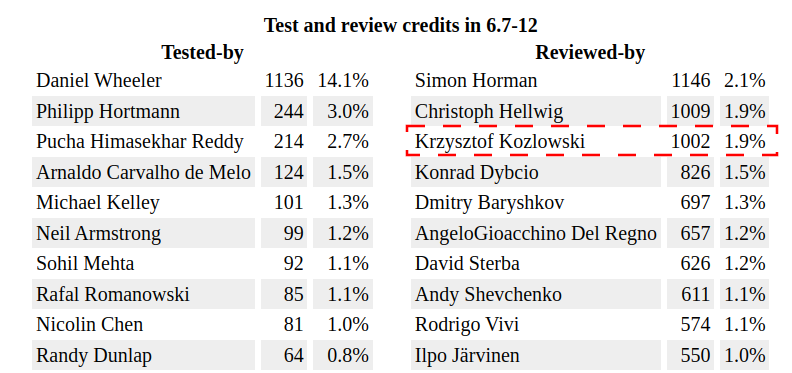

Krzysztof Kozlowski

krzk

I am however more proud of another impact I made: I am one of the most active reviewers of the last year of Linux kernel development. Reviewing takes a lot of time, a lot of iterations, a lot of patience, a lot of template answers, and results in only "some" of the reviewed-by credit going into the Linux kernel git history. Yet here I am: ~1000 reviewed-by credits for the last year, v6.7 - v6.12 Linux kernel.

Source, LWN.net:

https://lwn.net/SubscriberLink/997959/377cf2f076306247/

Lorenzo Stoakes

ljs

A govt that regularly disappears political opponents and plans to invade democratic Taiwan, and of course is the principal supporter of Putin in his invasion of Ukraine, without which that wouldn't be possible.

It continues to amaze me how stupid people are when evil is so obvious as this. But I guess people like cheap goods better than a spine.

https://www.bbc.co.uk/news/articles/cx2l4eynl4zo

HAMMER SMASHED FILESYSTEM 🇺🇦

lkundrak@metalhead.club

day 1000 of a terrorist state busy murdering and torturing innocent men, women and children instead of caring for their own. standing firmly in the middle ages, slaves to a psychopathic czar, with the feeling of supremacy paralleled only by the other great leader, their only friend.

yet there are politicians who are busy telling those who are sheltered from this monumental injustice by sheer luck that they're somehow the real victims when asked to give, not their lives, but a mere share of their wealth to defence aid.

this is not only an absolutely disgusting failure of compassion and humanity, but also that of long term planning.

[1/2]

Firstyear

firstyear@infosec.exchange

We've been working on a greenfield kerberos kdc in rust for @opensuse to support samba and nfs, especially once ntlm is removed by microsoft. So far it works for linux and mac, and we're going to work on windows soon. We're going to back it with support for tpms to protect secrets too, in order to ensure it's the most secure pam module out there. Long road ahead but so worth.

European Commission

EUCommission@ec.social-network.europa.eu

Tomorrow will mark 1,000 days since Russia's full-scale invasion of Ukraine.

On this despicable anniversary, we honour the brave Ukrainians working and fighting for a free Ukraine with the colours of its flag on our headquarters.

Our enduring friendship and solidarity are here to stay. So is our commitment to work shoulder to shoulder towards a peaceful future within our Union.

Слава Україні! Europe is with you!

HAMMER SMASHED FILESYSTEM 🇺🇦

lkundrak@metalhead.club

omg sir @vbabka you made the shittiverse go crazy!! 🎖️

Vlastimil Babka

vbabka

Hope someone finds this useful. http://lore.kernel.org/all/3b09bf98-9bd4-465b-b9c5-5483a6261dc7%40suse.cz

The youtube videos: https://www.youtube.com/watch?v=m9dZkRwWEj8

https://youtu.be/OvLEx6fPVrg

Oleksandr Natalenko, MSE

oleksandr@natalenko.name

In quite a number of Kconfig help text entries I see this: "If unsure, say N."

But that raises the question: How can I be sure?

Glad LKML turns to existential questions too.

https://lore.kernel.org/lkml/D5GVI1Q30BTS.1ZVQ4YC4OJYEL@cknow.org/

European Commission

EUCommission@ec.social-network.europa.eu

A spark of freedom can light up the future of many.

#OnThisDay, 35 years ago, the Velvet Revolution began in Prague.

When people took to the streets in the autumn of 1989, they risked their own freedom to achieve it for all.

Their unity inspired Europe and the world, and their peaceful revolution changed the course of history forever.

MAKS 24 👀🇺🇦

MAKS23@mastodon.social

‼️ 🤬 Massive Russian attack today:

▪️Russia attacks Ukraine's electricity generation and transmission facilities;

▪️Emergency power outages are starting to be introduced across the country;

▪️This is a combined attack. Different types of missiles, UAVs. Air defence works in many areas;

▪️Poland raised combat aviation;

▪️There are strikes in Mykolaiv (already 2 dead, 6 injured), as well as in Rivne, Kremenchuk, Dnipro, Odesa and Kryvyi Rih. In Kyiv – falling debris, 2 injured.

Brodie Robertson

BrodieOnLinux@mstdn.social

Intel's 3888.9% Linux Kernel Boost, Isn't What It Seems #Linux #YouTube https://youtu.be/OvLEx6fPVrg

Lorenzo Stoakes

ljs

https://donorbox.org/safe-skies-matching

Soatok Dreamseeker

soatok@furry.engineer

What To Use Instead of PGP

It's been more than five years since The PGP Problem was published, and I still hear from people who believe that using PGP (whether GnuPG or another OpenPGP implementation) is a thing they should be doing. It isn't. The part of the free and open source software community that thinks PGP is just dandy are the same kind of people that happily use…

Anastasia MacKenzie

the_anastasia@mas.to

This meeting could have been a blood ritual in the woods.

Chris Hallbeck

Chrishallbeck@mastodon.social

I like to set important emails as unread so they stay in my inbox and gradually get pushed off screen by newer important emails to never be seen again.