Steven Rostedt

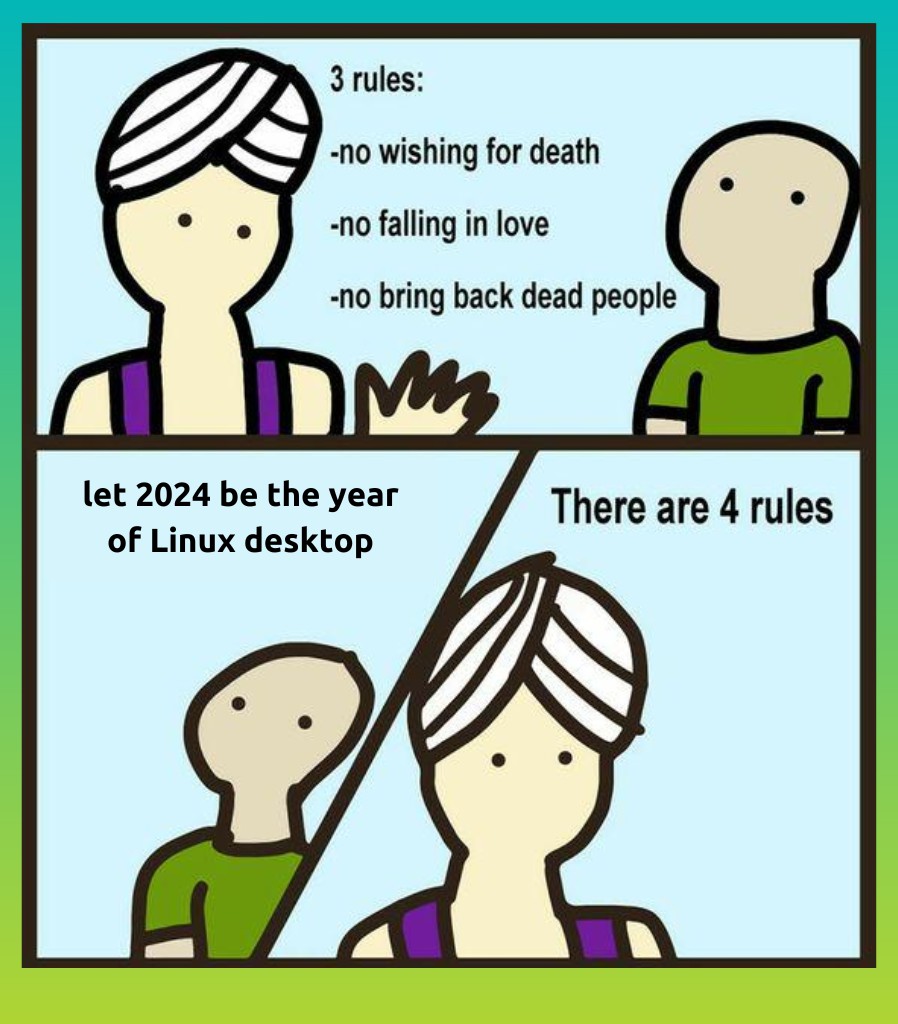

rostedt

[ stolen from a colleague ]

Steven Rostedt

rostedt

Linux Plumbers 2024 has accepted 9 Microconferences! But we had 26 submissions for 18 slots! What to do with that? Read about it here: https://lpc.events/blog/current/index.php/2024/05/03/awesome-amount-of-microconference-submissions/

Steven Rostedt

rostedt

@jann @vbabka @T_X @kees Note, CONFIG_PSTORE_FTRACE just adds hooks into the ftrace infrastructure to have it write into the pstore. What I did is different. Here you give the ftrace infrastructure a block of memory (starting address and size), and it will map its ring buffer on top of that. There are no hooks. All of ftrace’s functionality will write into that range of memory.

Yes, if pstore can give me a block of memory, I’ll use it. Really, the code I wrote just lets you use any block of memory. How I get that block of memory is part 2 of this story. 😉

Steven Rostedt

rostedt

https://lore.kernel.org/lkml/20240306015910.766510873@goodmis.org/

Steven Rostedt

rostedt

@kernellogger But what if that “crazy” operation is actually documented in a man page?

# mkdir /tmp/tracing
# cp -r /sys/kernel/tracing/events /tmp/tracing
# exit
$ trace-cmd sqlhist -t /tmp/tracing ...

https://trace-cmd.org/Documentation/trace-cmd/trace-cmd-sqlhist.1.html

Steven Rostedt

rostedt

Doing some testing against a change, I ran two traces. I recorded the trace before applying the patch as trace-b.dat, and the trace after applying the patch as trace-a.dat. Then, doing an ls trace*.dat, I have:

trace-a.dat
trace-b.dat

And it looks like trace-a.dat should come before trace-b.dat. I’m so confused! 😛

Steven Rostedt

rostedt

I’m being “schooled” by Al Viro on how dcache, inodes, and files work internally.

This is a very interesting read that I recommend to anyone who wants to understand the VFS better.

And don’t just stop at that email, the thread goes on. Very educational. Hopefully someone smarter than I can add this to the VFS documentation in the kernel 😉

Steven Rostedt

rostedt

Allow ring buffer to have bigger sub buffers

Hmm, that subject line may not have been appropriate. 🤔

Steven Rostedt

rostedt

@gregkh @vbabka @qyousef Well, the problem is that it still requires manual effort just to include the clean up patch. The point I was making is that if a clean up patch causes a backport to fail, I still have to look at why, instead of the fix just being pulled in nicely by the stable tag. The clean up in question touched much more than the areas that failed, so it too may not backport properly.

Steven Rostedt

rostedt

@qyousef @vbabka Exactly. If you do clean ups in the code that you are modifying, then all is OK: the modifications you are making will cause the backports to fail anyway, so the clean ups do not cause extra work. But if you do random clean ups in code that hasn’t changed in years and a bug is later found in that code, then the backports are going to be a pain, because the fix has to be reworked for every previous version before the “cleanup”.

Steven Rostedt

rostedt

@qyousef Using false rather than 0 is more about making it clear that the value is a boolean and is not to be taken as numeric. Sometimes that makes the logic easier to understand. I’ve been trying to use bool in those cases as well. This is also a requirement if you ever plan on using Rust 😉

Steven Rostedt

rostedt

I don’t mind clean up patches, but this is the reason a lot of Linux kernel maintainers frown on them.

https://lore.kernel.org/all/2023120938-unclamped-fleshy-688e@gregkh/

This failure was caused by a clean up patch that converted everything it could to “bool”:

https://lore.kernel.org/all/20230305155532.5549-3-ubizjak@gmail.com/T/#u

If I had not accepted that clean up, this backport would have been pulled in automatically with no extra work from me. But because I added that clean up, I now have to fix this for every stable release that predates it 🙁