K. Ryabitsev-Prime 🍁

monsieuricon

Hi:

Your vulnerability report is stupid. You have no idea what you're talking about, or you're hoping that nobody actually checks your findings. Unfortunately for me, I did check your findings and I will never get these 20 minutes of my life back. Everyone is dumber as a result of your report. Please do not contact us again.

Greg K-H

gregkh

Greg K-H

gregkh

For "why we provide CPE", we provide it because some groups were "adding" CPE data that was flat out wrong because our records did not have any values. Providing the "real" CPE description, according to their specification, mostly prevents that from happening.

CPE on its own doesn't actually give you enough information to properly answer an "am I vulnerable" question given the release that someone is currently on, as you well know. I guess it's good enough to search on "linux*" to filter that way :)

Hopefully PURL will help out a lot here, I have hope...

Greg K-H

gregkh

http://www.kroah.com/log/blog/2026/02/16/linux-cve-assignment-process/

Jamie Gaskins

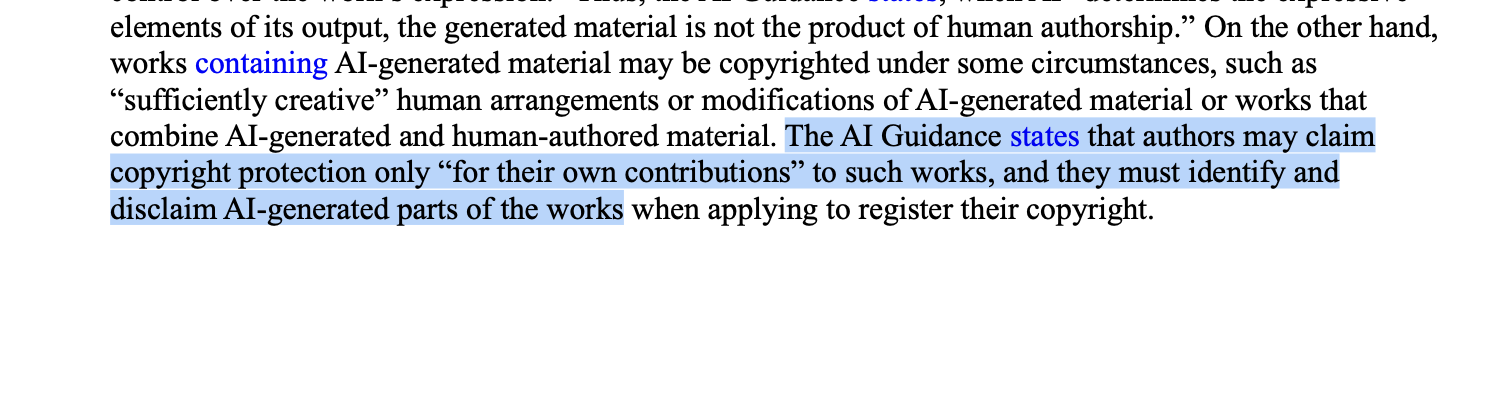

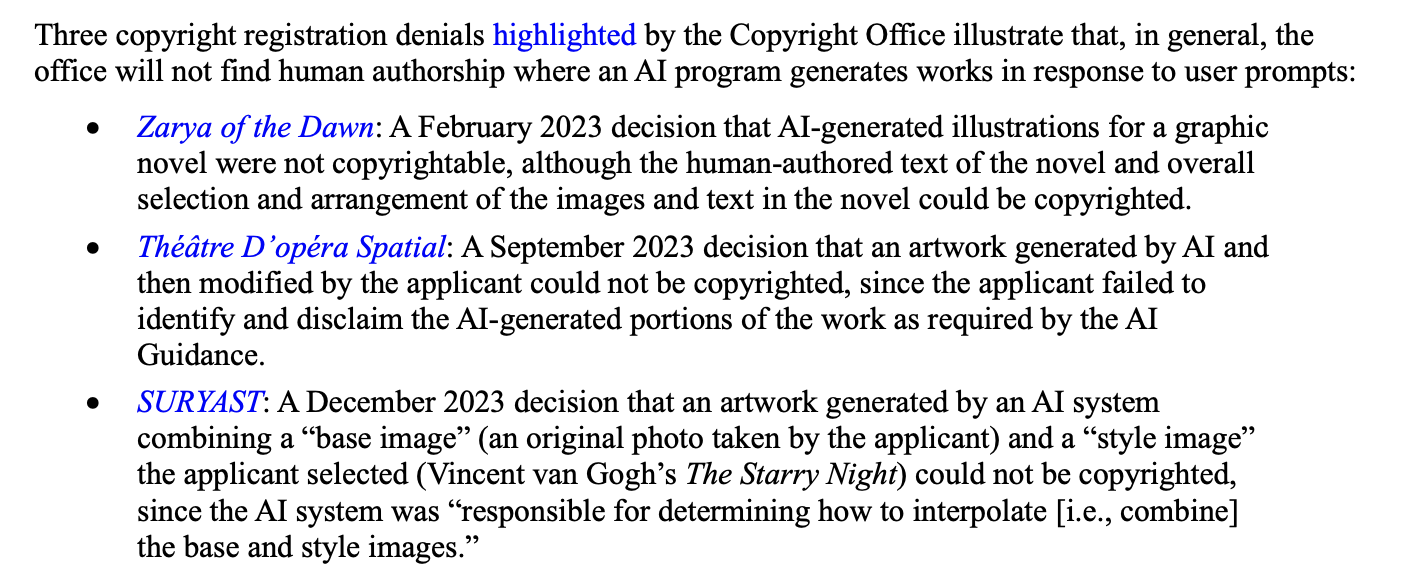

jamie@zomglol.wtf

If you use AI-generated code, you currently cannot claim copyright on it in the US. If you fail to disclose/disclaim exactly which parts were not written by a human, you forfeit your copyright claim on *the entire codebase*.

This means copyright notices and even licenses folks are putting on their vibe-coded GitHub repos are unenforceable. The AI-generated code, and possibly the whole project, becomes public domain.

Source: https://www.congress.gov/crs_external_products/LSB/PDF/LSB10922/LSB10922.8.pdf

Greg K-H

gregkh

Alexandre Dulaunoy

adulau@infosec.exchange

@jbm The backlash against Linux kernel advisories is confusing. We wanted transparency; now we have it. More data is always better than a black box. If the new influx of CVEs is breaking your vulnerability management workflow, the problem might be your process, not the advisories.

Thanks for the hard work @gregkh

Thorsten Leemhuis (acct. 1/4)

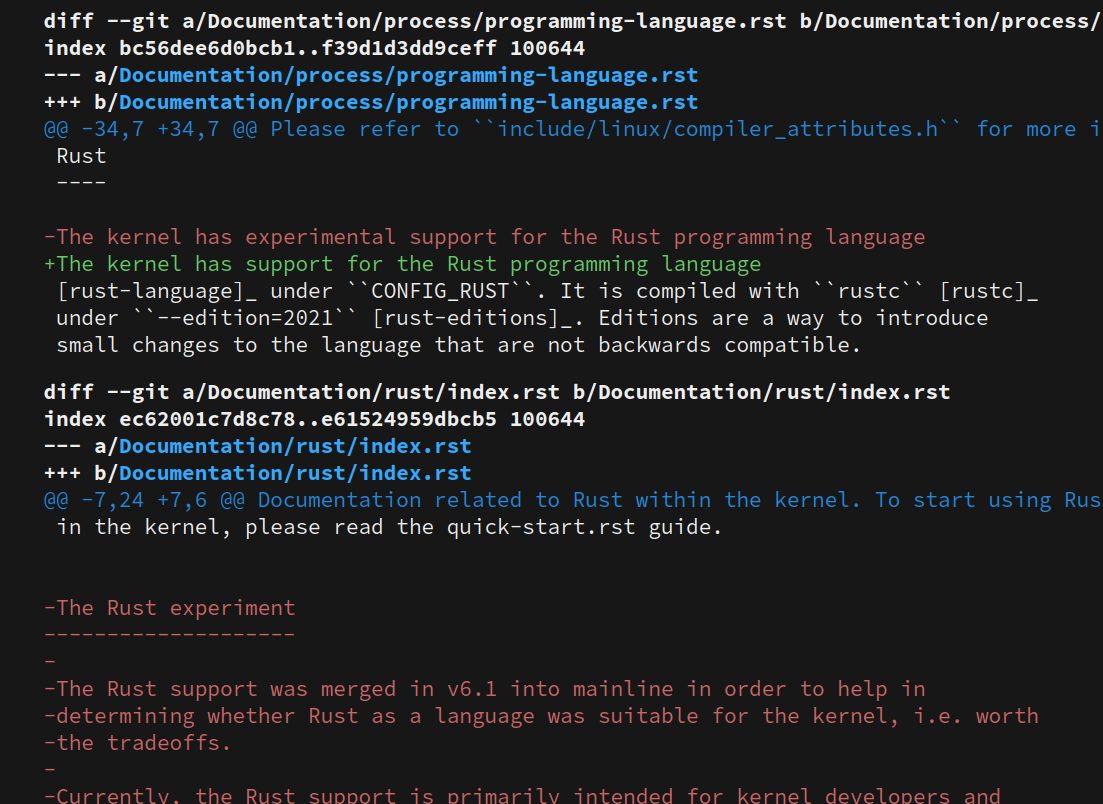

kernellogger@hachyderm.io

The #Rust support in the #Linux #kernel is now officially a first class citizen and not considered experimental any more:

https://git.kernel.org/torvalds/c/9fa7153c31a3e5fe578b83d23bc9f185fde115da; for more details, see also: https://lwn.net/Articles/1050174/

This is one of the highlights from the main #RustLang pull request for #LinuxKernel 7.0 that was merged a few hours ago; for others, see https://git.kernel.org/torvalds/c/a9aabb3b839aba094ed80861054993785c61462c

Greg K-H

gregkh

The OpenSSF guide is great, and a huge shoutout to Python for paving the way for us all to be able to do this.

Greg K-H

gregkh

Greg K-H

gregkh

CPE doesn't work for describing a kernel configuration option, as that is not what it was designed for. It was an attempt to make a machine-readable version/program definition, and even for that, it does not work well anymore. PURL is the hope for the way out of that mess, and based on my conversations with the PURL developers at FOSDEM, there is hope that it will "soon" work for all open source software packages (right now it does not, so the kernel cannot use it).

European Open Source Academy

europeanOSacademy@fosstodon.org

The 2nd Annual #EuropeanOpenSourceAwards have come to a close, but you can still revisit the best moments of the Awards Ceremony.

👇 Watch the recording here:

https://awards.europeanopensource.academy/awards/2026-recording-event

daniel:// stenberg://

bagder@mastodon.social

The European Open Source Awards ceremony from January 29th, in one loooong recording with yours truly showing up several times.

Most blabbing at 1h24 and onward when @gregkh was up.

bert hubert 🇺🇦🇪🇺🇺🇦

bert_hubert@eupolicy.social

I am losing it at how many of my peers have forgotten what software engineering is. It is not typing in lines of code.

Kernel Recipes

KernelRecipes@fosstodon.org

Since we’re not superstitious, the 13th edition of KR will take place September 21–23, 2026 (black cats strictly forbidden during this edition, even on a leash… 😄). We hope to see loads of you there!

And because we want to keep offering the best possible conditions for three days of good vibes and community for everyone, feel free to support this edition by becoming a sponsor. All the info is here!

David Gerard

davidgerard@circumstances.run

reading vibecoders talk about how great vibecoding is for engineering real things is like reading bitcoiners talk about how they think money works

mhoye

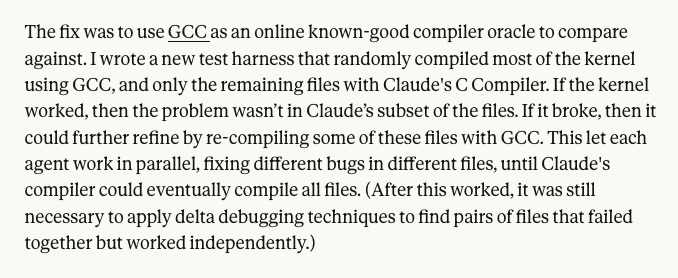

mhoye@cosocial.ca

Being on Team Words Mean Things is difficult these days, particularly when multibillion-dollar companies put out breathless press releases saying "By using our massive language model, whose training data includes every version of GCC ever released, and having it autocorrect its own output by testing it against GCC, we managed to make a C compiler that mostly works for only $20,000 in a week and gosh I have so many feelings."

I mean, what the fuck are we even doing here.

☭. evie

vie@hachyderm.io

I think it's interesting how software engineers are (among?) the most eager working class group to replace themselves with LLMs.

It's interesting because LLMs do a worse job than us, we lose the ability/skill to do our job the more we use them, lose our jobs, produce worse software, are less satisfied with our work, etc.

Yet so many of my peers seem to be super excited about and advocate for it, while other working class groups at least detest LLMs if not even consider organising themselves to protect their trade/jobs from LLMs.

Are we becoming the cops (read as: class traitors) of this techno-fascist dystopia?