Thorsten Leemhuis (acct. 1/4)

kernellogger@fosstodon.org

Ever wondered why @torvalds coined the #Linux #kernel's "no regressions" rule? He just explained it again here: https://lore.kernel.org/all/CAHk-=wgtb7y-bEh7tPDvDWru7ZKQ8-KMjZ53Tsk37zsPPdwXbA@mail.gmail.com/

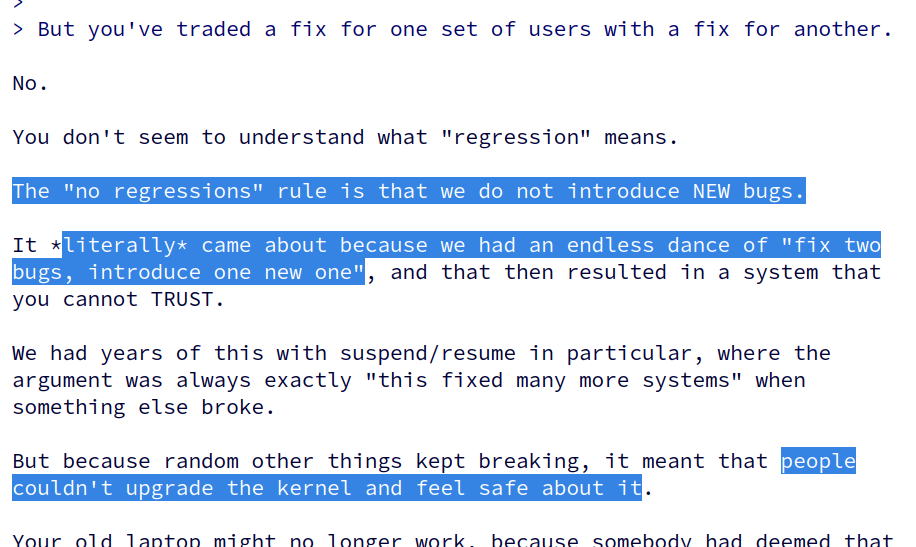

"[…] I introduced that "no regressions" rule something like two decades ago, because people need to be able to update their kernel without fear of something they relied on suddenly stopping to work. […]"

Follow the link for context and other statements that did not fit into a toot.

Oleksandr Natalenko, MSE

oleksandr@natalenko.name

@kernellogger @torvalds I'd appreciate having this rule applied to the "stable" kernel too.

An imagined 'no regressions and totally perfect so never make a mistake' rule does not exist.

Christian Heusel

gromit@chaos.social

@oleksandr @kernellogger @torvalds What's the issue? To my understanding, this is already the case 😊

Of course, this does not mean that the stable kernel does not contain regressions.

Thorsten Leemhuis (acct. 1/4)

kernellogger@fosstodon.org

It is applied to stable series as well; but accidents happen (maybe too many, yes), and the way we deal with them is sometimes not ideal[1].

That's a very long and complex story, made very short.

[1] For example, it might take weeks for fixes to arrive in stable trees when the problem happens in mainline as well, as the fix then has to be mainlined first to avoid later regressions. Improving that process somehow (by temporarily dropping patches or merging fixes quicker) is on my agenda.

Oleksandr Natalenko, MSE

oleksandr@natalenko.name

@gromit @kernellogger @torvalds Too much backporting is happening without proper review. For instance, ~400 commits are pending for v6.9.3 at the moment, and the majority of them were autoselected.

Oleksandr Natalenko, MSE

oleksandr@natalenko.name

@kernellogger @torvalds The effect of this backporting race is quite the opposite of what the "stable" maintainers expected. I'm forced to stop using it and do backports on my own. I'm curious to see what's in your plans.

Thorsten Leemhuis (acct. 1/4)

kernellogger@fosstodon.org

FWIW, "autoselected" makes it sound like it was "AUTOSEL" that chose them; that is not the case for those patches afaics.

And what you mention here is a slightly different topic and thus could be seen as a straw man (Linus talked about dealing with regressions, not about preventing them).

Christian

chris@social.uggs.io

@kernellogger @torvalds Can #HomeAssistant please adopt this rule?

I am having more issues with Home Assistant than with the Linux kernel.

Jarkko Sakkinen

jarkko

And also, for instance with this fresh bus encryption feature for TPM chips: despite getting only a single performance regression report from a private user so far, I scaled "default y" down to "default X86_64", because that is the only platform where I've been able to test it successfully, even with an old Intel Celeron NUC.

I would also emphasize this as a maintainer: even if the new feature is exciting, scale it down *eagerly* before it hits a release. It is always easier to scale up later on than to scale down. I tend to treat "uncertainty" as a regression, even without better knowledge, rather than "wait and see". I'd label any other behavior as pure ignorance: you knew that shit might hit the fan but did absolutely nothing.

Pavel Machek

pavel

@chris @kernellogger @torvalds The first rule of Home Assistant is never update. The second rule of Home Assistant is never update.

Thorsten Leemhuis (acct. 1/4)

kernellogger@fosstodon.org

Several small solutions would be needed afaics, among them:

* some of the fixes that wait until the merge window should not wait for it, to distribute things better

* those that should wait should ideally be marked "backport only after -rc4" or so (see https://git.kernel.org/torvalds/c/2263c40e65255202f6f6d9dfa31d23906995ff7c)

* maybe also some way to mark "this is urgent, backport quickly", so others can wait longer

* ideally: make more subsystems use stable tags (and ignore anything not tagged by them)

Oleksandr Natalenko, MSE

oleksandr@natalenko.name

@kernellogger @torvalds linux-stable-v2? linux-steady? linux-really-stable?

Thorsten Leemhuis (acct. 1/4)

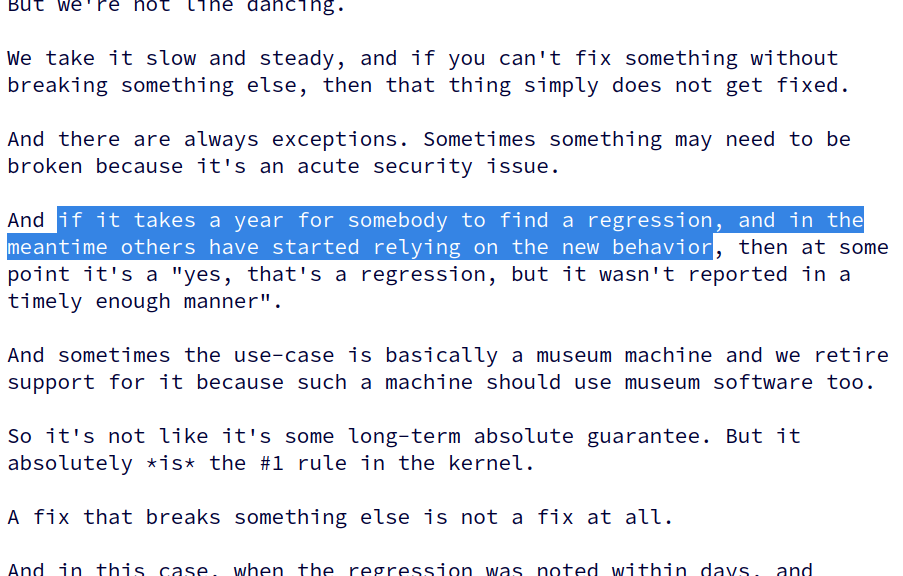

kernellogger@fosstodon.org

2/ Also note that Linus' message[1] indirectly explains why you might not be able to claim "no regressions" when you only find a problem after updating from one longterm aka LTS #Linux #kernel series to a later one:

By then others might have started relying on the new behaviour, hence fixing the regression might be impossible without causing a regression for those other people – and then you might lose out.

[1] https://lore.kernel.org/all/CAHk-=wgtb7y-bEh7tPDvDWru7ZKQ8-KMjZ53Tsk37zsPPdwXbA@mail.gmail.com/

Thorsten Leemhuis (acct. 1/4)

kernellogger@fosstodon.org

That's the wrong way IMHO; I prefer fixing the real problem to working around it. And who would do all the work?

The thing that IMHO would help the most would be a git tree with two branches for each relevant series: one containing regression fixes that are already queued for mainlining, the other containing reverts and fixes under review (as mentioned a week or two ago).

Aleksandra Fedorova

bookwar@fosstodon.org

I think that's quite a common case with kernel development discussions.

Things which happen within kernel development and make a lot of sense within the context of that development, get misinterpreted by a crowd of spectators and then used to harass other projects.

And then those other projects get into a fight with the kernel, while the core issue is that you should not use any development rules, concepts, or ideas (good or bad) to harass other people.

Oleksandr Natalenko, MSE

oleksandr@natalenko.name

@vbabka @gromit @kernellogger @torvalds Less stable, because entropy grows, especially with random cherry-picking.